Oracle Exalytics Week : How Does it Perform, and How Can I Test It?

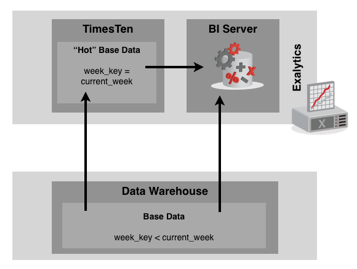

This week we've been looking in-depth at the new Oracle Exalytics In-Memory Machine, the new Exa-appliance from Oracle aimed at the BI tier in an Oracle BI/DW system. We've looked at what problem Exalytics looks to solve, how it's architected and how TimesTen provides the in-memory database cache that makes it all possible. Yesterday, we took a look at the Summary Advisor that recommends, and then creates a script for generation of these aggregates, and we've seen the BI Server uses these aggregates to make queries run faster. Here's a quick recap on the other postings in the series:

- Oracle Exalytics Week : The Case for Exalytics

- Oracle Exalytics Week : Introducing Oracle Exalytics In-Memory Machine

- Oracle Exalytics Week : Exalytics & TimesTen - Under the Covers

- Oracle Exalytics Week : The Summary Advisor

- Oracle Exalytics Week : How Does it Perform, and How can I Test It?

So, the $64,000 question - how well does it work? Well, we can probably divide this into two sections, once of which we've got some thoughts on, and the other we'll have to wait a while for. Let's start with the first area then - how well does it all fit together, and how practical is it to use the Summary Advisor and TimesTen?

The basic idea behind the summary advisor is to use additional statistical data on queries to recommend aggregates, which are then implemented by the same process that creates aggregates for the Aggregate Persistence Wizard. The recommendation process works well, but you'll need to make sure your repository is "just right", and be prepared to work though issues that crop-up during the deployment process, before things run smoothly. Some general recommendations for using the Summary Advisor include:

- Start by generating and testing single aggregates at a time, as failures in nqcmd script execution cause the whole process to fail and you then have to "un-pick" the half-created aggregates from your repository. Focus on one or a few facts at a time, get each aggregate creation working OK, and then build up from there. Don't run the wizard for all facts and all time periods, select all recommendations and then try and implement them all in one go, as it's most likely it'll fail.

- Similarly, constrain your time selection to a representative range, maybe only considering the past couple of months of data, not all statistics over all time

- Make sure your repository, and source data, are "clean" before you run the Summary Advisor, by running the "Estimate Levels" feature to help spot non-unique dimension keys, and make sure any errors or relevant warnings are cleared in the repository before you make a start

There are also some general restrictions on what repository features can't be used with the Summary Advisor (this applies to the Aggregate Persistance Wizard, as well). For example, you can't use logical dimensions with skip-level or ragged hierarchies, or with value-based hierarchies, and there are restrictions around degenerate dimensions, non-additive dimensions and the like.

In practice, we found that the process of implementing the aggregates generated by the Summary Advisor on a moderately complex repository quite labour-intensive at first, until you work through issues in that particular repository and can get aggregates deploying smoothly. Once the aggregates are implemented and registered in the repository, refreshing them is relatively straightforward, though this means making structural changes to the repository online, which shouldn't really be done whilst users are querying the system. Our feeling is that this is something you'd do overnight, along with the main data warehouse load, with a fresh set of recommendations generated every so often, particularly when usage patterns, and data distribution and skew, change.

Of course as an alternative to loading just recommended aggregates into TimesTen, you could load data into it yourself, using techniques such as:

- Using the Aggregate Persistence Wizard to manually select and implement your own aggregates

- Using a tool such as ODI to ETL data, possibly at more detailed-level, into TimesTen

- Or even replicating your data warehouse data into TimesTen using GoldenGate, in real-time

All of these are interesting possibilities, and ones we're trying-out and prototyping. They've all got their own restrictions and issues though; for manually created aggregates, in theory the Summary Advisor should have recommended any that are worth using, so you'll need to double-check that these are really of value. For ETL'ing data into TimesTen, you'll then need to properly map this data into the repository, which may require additional logical table source, fragmentation or other clauses, and other non-trivial settings. If you plan to replicate your data warehouse, or part of a data warehouse (say, the most recent data) into TimesTen, you'll need to bear in mind that whilst there's a terabyte of RAM on the server, once you take out the memory required for the OS and applications, and the matching temp space that TimesTen usually requires, you're really only looking at around 400GB of usable RAM space, which is why Oracle went down the hot spot/aggregations route in the first place.

So, on to the second question - what's performance like in practice? Well, we've been performing tests on the software behind Exalytics for some months now, but we can't properly test it until we get hold of the hardware + software, as you can't really model 1TB of RAM and 40 cores on a laptop, and certain optimizations in OBIEE are designed for use specifically with the Exalytics hardware. So, to really give Exalytics the once-over, and to provide a platform for customer training, and for customers to try their own data and dashboards with Exalytics, Rittman Mead are investing in one of the first Exalytics boxes in Europe, and hosting proof of concepts, and demo days, down at our office in Brighton. If this is something that would interest you, and you'd like to road test Exalytics with your own data, drop us a line and we'll let you know when the box is installed and up and running.

For now though, and until our box arrives, that's it for us on Exalytics. Keep an eye out in March for the first real-world performance figures, and over the next couple of weeks for details on our implementation, training and support services around Exalytics.