Days Two and Three of an OBIEE Project : More Data Modeling

The other day I posted some notes on the first day of an OBIEE project, I'm just on the train back now after finishing the next couple of days, I thought I'd post some notes on how these two following days went as some interesting things came up during the two days of development.

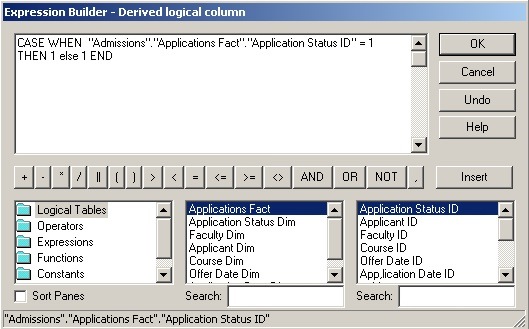

The first issue we came across related to the technique I talked about in the previous post, where we broke out measures by a "scenario" or in our case, an "application stage" dimension. The original problem we had was that our fact table source had a set of measures that were dimensioned by an application stage dimension, and the client wanted a selection of these measures by application stage listed across the report. If they wanted all of the measures by a selection of application stages, or a selection of measures by the same set of application stages we could have used a crosstab and had application stage as one of the dimensions, day of month (say) as the other and we'd have been fine, but as the report used an arbitrary selection of measures and application stages, the only way around this was to "pivot" the fact table by the application stage and derive out the measures using a CASE statement, like this:

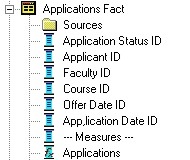

This would then lead to this measure appearing in the logical fact table as a calculation, rather a normal logical column mapped to a physical column. Notice in the list of measures below how it appears using the formula icon.

All of this worked well when displaying data from the fact table in detail form, i.e. with one report row per row in the fact table, which in my case equated to each event that was recorded against the course application. The problem came though when I needed to aggregate the data up to the day, or month or whatever level - the derived measures wouldn't aggregate. If you go to edit the properties of the derived measure, the Aggregation tab is greyed out, and if you try and wrap the CASE statement with a SUM() aggregation, OBIEE complains that you're using a deprecated method of aggregating measures - basically you can't try and slip in a "double aggregation" by adding the SUM() clause to the logical column definition.

What you can do is to edit the column formula in the Answers report and add your own SUM() clause around the column definition, this seems to trick OBIEE in to adding the additional aggregation and your measures then roll up properly in to day, month and so on. But for us, this was going to be a bit complicated for end-users to understand - some measures aggregate properly, some require manual intervention, so we took a different route and created a view over the original fact table that broke out the measures in the same way, re-mapped the logical fact table to use this view instead and the measures then aggregated with no problem, as OBIEE just saw them as regular table or view columns.

We ended up doing this sort of "substituting OBIEE data transformation techniques with SQL views" thing at several points in the build, mostly when we were trying to do something complex - like, say, mapping an aggregate table on to a logical table that itself was derived from the fact table plus some calculated columns - which confirmed to me that to be good at developing using OBIEE, you've got to be pretty good with both the OBIEE tool and the underlying database underneath. In some circumstances, it makes sense to transform data and create calculations using the OBIEE Administrator tool, in other circumstances you're better off doing the transformations and calculations in a view or an ETL process and then mapping OBIEE on to this updated physical data model. My advice in terms of getting data together for OBIEE is to try and do as much work as you can in the physical data source - ideally, transforming your OLTP data into a denormalized star schema - so that the mapping you create in the OBIEE metadata model is as simple as possible. You can certainly do a lot of clever things in OBIEE to transform normalized data, but the more abstractions you heap on top of other abstractions the more chance their is that OBIEE will trip up at some point and say that this certain combination of transformations isn't valid. A lot of this is just trial and error, and experience you get from doing a few implementations, I guess the advice I'd give is to be flexible around your use of OBIEE transformations, creating views and materialized views over your source data and using ETL code to tackle more complex transformations, in the implementations I've worked on we usually use a mix of all three techniques.

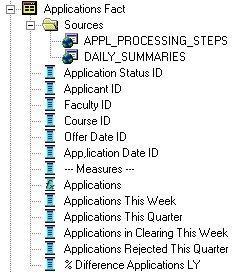

The other interesting issue we had was around the time-series calculations we needed to create. On projects I've worked on in the past, we've typically had, say, a Vale measure that recorded the value of a particular transaction sale. When these were aggregated up to the day level, the measure showed the total sale value that day. If you then use the TO_DATE and AGO time series functions in OBIEE you can calculate that measure rolled up to a particular month, quarter or year, or for a particular month, year or quarter (or any other time period) offset. For this project though, the time offsets were pretty arbitrary - one measure had to be calculated based on a 30 day period offset by a number of days before the day of the report, others had complex conditional clauses where you could count an application if it was current but not declined except if the applicant was in clearing or something similar. One of the measures didn't actually relate to applications at all, or at least it couldn't be derived from individual application activity records, and so the decision was taken to not to try and derive these measures from the fact table, but to create a summary table, with a granularity of one row per day, that we'd then populate either through some Oracle SQL (potentially using analytic functions or subquery factoring), PL/SQL or Oracle Warehouse Builder. We'd then map this summary table on to the existing logical fact table, like this:

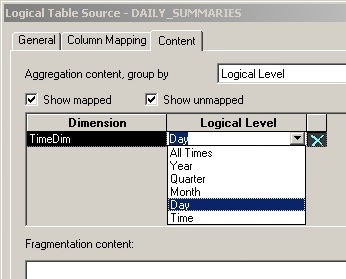

Then we'd make sure we'd defined a dimension over the Times logical dimension table that had a day level, and tell OBIEE to only use it at certain levels of aggregation.

Answers would only then show these day-level figures when a report was generated at that level, but the measures would appear in the same fact table as all the detail-level measures, giving the impression to users that it's all part of the same data set. One thing we gave a bit of thought to was how to populate this summary table - we could either write some PL/SQL or use an OWB mapping to populate it overnight, which would have the benefit of, once the table was populated, providing access to these aggregates very quickly, but would have the drawback of the values only being updated once a day. We could use a materialized view using fast refresh, which might potentially give us a combination of up-to-date data and stored totals, or we could try and generate the total dynamically using a view. One thought we did have was to use the Fragmentation feature of OWB to get the best of both worlds - we could use PL/SQL or OWB to create an overnight set of totals and then combine this with an SQL view over todays data, using Fragmentation, to provide fast access to historical aggregated data todays data as well, with totals generated on the fly, if more current data was needed.

Anyway, what we then found was once we'd cracked this main fact table, which pretty much contained all the data on their main business process, the rest of the model came easily. Taking a look through some of the other reports needed, we noticed that we could satisfy all of them by adding a couple of extra dimensions to this main fact table, and taking one of the dimensions - Faculty, say - down to a lower level, School. This was pretty straighforward to do; we amended the SQL view that provided the fact table data to include the extra dimension keys, re-imported it into the repository, the foreign key links were remembed by the tool, we then generated the other dimensions and mapped those on to the model. In terms of how the time broke down, over the three days it was about 25% initial analysis of the business process and sample reports, 50% producing the first, main fact table, and then the remaining 25% was spent either enhancing this fact table, adding extra dimensions or the odd small fact table that ran alongside the main one. I've done a fair few OBIEE implementations now and this one reinforced my view that to be good with OBIEE, you need to have three main skills

- Knowledge of OBIEE, specifically how the physical to logical model translation works, how to use Logical Table Sources, how to derive measures, how to create joins in the Logical and Physical models, and in particular (and this is where experience on deployments, rather than demos comes in) knowledge of all the little quirks, like the non-aggregatibility of calculated columns issue I mentioned earlier on, how to resolve self-joins and multiple joins between tables through Aliases and so on.

- Knowledge of dimensional modeling, particularly how to pick a grain for your fact table, how to design a fact table that satisfies the most amount of queries, how to design a set of facts and dimensions that gives you analysis across the business - I spent most of my time on this and it's not specific to OBIEE, it's more to do with dimensional data warehouse design and the best way to read up on this is to read the Kimball books.

- Knowledge of your database platform, so that you know when it's best to transform and calculate data in OBIEE, when it's best to do it in the database, and when you're going beyond what you can do on the fly and you should be using a data mart and an ETL tool such as OWB. Getting this balance right between doing it in OBIEE and doing it in the database is key I believe.

One last thing that I think's worth mentioning before I wrap up is a couple of quirks around OBI SE One, the "cut down" version of OBIEE that's aimed at smaller deployments of 50 users or less. Most people are aware that whilst most of the OBIEE products are in OBI SE One (what you lose is mainly Delivers, for example), a couple of interesting things that are also not included are firstly, the BI Office Plugin, so you can't analyze Answers data in Excel or Powerpoint for example, unless you run it all through BI Publisher and use the BI Publisher add-in instead. The other thing that caught us out was that the version of BI Server that it ships with is 10.1.3.1, which is several releases behind 10.1.3.3.2 that's currently shipping as part of OBIEE - which means that the EVALUATE and EVALUATE_AGGR functions are not present, which we were looking to use to get the Oracle database to perform some analytic functions for us. I'm not sure if license-wise you can update the BI Server element of OBI SE One to the latest release, but it certainly caught us out and pushed us more towards the SQL view solution.

Anyway, that it for me for a week or so, I'm leaving the client team to finish off the model now and start producing some of the reports. When I come back I'll help them fine tune some of the data model and report layouts, then we'll be adding row-level security to the model which we'll do using OBIEE logical table filters rather the Oracle VPD, as their underlying Oracle database is Standard Edition which I don't think supports VPD.