Storing Detail-Level Data in Oracle Exalytics

Although the primary use-case for TimesTen within Oracle Exalytics is to store relatively-small aggregate tables created using the Summary Advisor, many customers have asked us whether it's possible to store their entire reporting dataset in TimesTen. With 1TB of RAM in the original version of the Exalytics hardware, and now 2TB of RAM in the new X3-4 version, even with the requirement to set aside space in RAM for TimesTen's temporary objects, many data warehouses or data marts would fit in 500GB or 1TB of RAM, which makes the idea pretty interesting.
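To put rough numbers on that sizing argument, here's a minimal Python sketch. The 50% share reserved for temporary objects is an assumption inferred from the 1TB-to-500GB and 2TB-to-1TB figures above, not a TimesTen rule, and the function names are hypothetical. Real sizing would also need to allow for the OS, the BI Server and anything else running on the box.

```python
def usable_for_data_gb(total_ram_gb, temp_fraction=0.5):
    """RAM (in GB) left for table data after reserving a share for temp objects.

    temp_fraction is an assumed share for illustration, not a documented
    TimesTen setting.
    """
    if not 0 <= temp_fraction < 1:
        raise ValueError("temp_fraction must be in the range [0, 1)")
    return total_ram_gb * (1 - temp_fraction)


def fits_in_memory(dataset_gb, total_ram_gb, temp_fraction=0.5):
    """True if the dataset fits in the RAM left over for table data."""
    return dataset_gb <= usable_for_data_gb(total_ram_gb, temp_fraction)


if __name__ == "__main__":
    # 1TB and 2TB expressed in GB (binary units, an arbitrary choice here)
    for label, ram_gb in (("original Exalytics", 1024), ("X3-4", 2048)):
        print(label, usable_for_data_gb(ram_gb), "GB available for data")
```

Changing `temp_fraction` is the quickest way to see how sensitive the "will it fit?" answer is to the temporary-space assumption.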

If you've read blogs from us in the past though, you may have picked up on comments about TimesTen not being a great place to store large tables, as it lacks features such as table partitioning, parallel query and other VLDB essentials we're used to with the Oracle database. Even though TimesTen runs in-memory, there's still a cost to table scans, joins, row lookups and so on, and in some cases this can make querying a TimesTen database actually slower than querying an equivalent Oracle one. So where's the tipping point for this, and is it possible to put an entire data warehouse in-memory, such as the BI Apps data warehouse that Peter Scott talked about in a blog post series a few months ago?

This is actually something we've been looking at fairly intensely over the past few weeks, as we're getting this request from customers looking to maximise the return on their investment in Exalytics. Where it gets particularly interesting is that there's also a second option for storing these large, detail-level datasets in Exalytics - the Essbase Aggregate Storage Option (ASO), an alternative to the more traditional Block Storage Option (BSO) that's capable of storing and analyzing very large, highly dimensional sparse datasets, and which, with the 11.1.1.7 release of OBIEE 11g, comes pre-installed and pre-integrated with OBIEE. So, if a customer wants to store their entire data warehouse or data mart on an Exalytics server, ideally in-memory but whichever way works, taking advantage of the high-performance Exalytics hardware and its close integration with Oracle's BI and OLAP software, how do the options work out on a sample dataset?

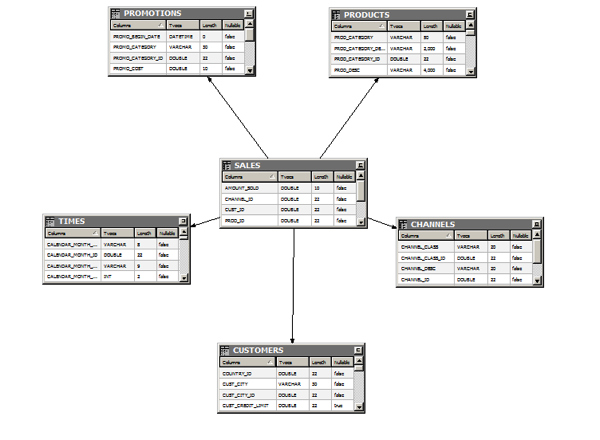

Let's start off with the SH (Sales History) sample dataset that comes with all recent Oracle databases, made up of a couple of fact tables and a set of related dimension tables. To take one of the fact tables, SALES, the table contains just over 900k rows, is partitioned by year, and out-of-the-box has a bunch of materialised views and indexes to support it and speed up user queries. Disabling the MVs for the time being and importing the tables into the BI Repository, my initial physical model looks like this:

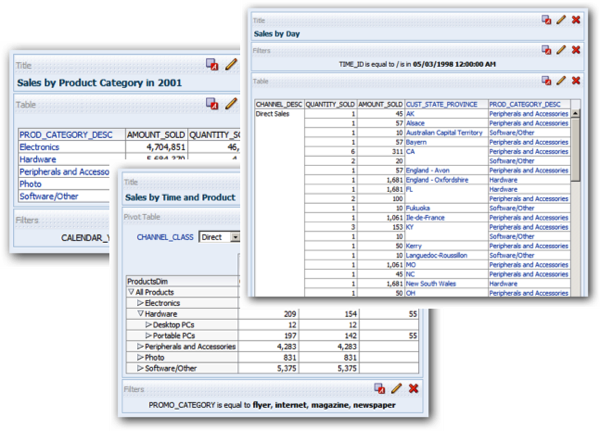

Running a sample set of reports with caching turned off, response time against this Oracle source was pretty good even with no MVs, with no noticeable delay. Of course this is only a single user on an otherwise unused Exalytics server, but on this size of data response time is perfectly acceptable.

Looking at the physical SQL for a typical one of the analyses, you can see hash joins and partition elimination taking place, standard features within an Oracle data warehouse with partitioned tables.

```
SQL> explain plan for
2 select sum(T43903.QUANTITY_SOLD) as c1,
3 sum(T43903.AMOUNT_SOLD) as c2,
4 T43814.CHANNEL_DESC as c3,
5 T43841.CUST_STATE_PROVINCE as c4,
6 T43866.PROD_CATEGORY_DESC as c5
7 from
8 SH.CHANNELS T43814,
9 SH.PRODUCTS T43866,
10 SH.TIMES T43911,
11 SH.CUSTOMERS T43841,
12 SH.SALES T43903
13 where ( T43814.CHANNEL_ID = T43903.CHANNEL_ID and T43841.CUST_ID = T43903.CUST_ID and T43866.PROD_ID = T43903.PROD_ID a
ID = T43911.TIME_ID and T43903.TIME_ID = TO_DATE('1998-05-03 00:00:00' , 'YYYY-MM-DD HH24:MI:SS') and T43911.TIME_ID = TO_DAT
0:00:00' , 'YYYY-MM-DD HH24:MI:SS') )
14 group by T43814.CHANNEL_DESC, T43841.CUST_STATE_PROVINCE, T43866.PROD_CATEGORY_DESC;

Explained.

SQL> set lines 130
SQL> set pages 0
SQL> select * from table(dbms_xplan.display);

Plan hash value: 3773586640

-------------------------------------------------------------------------------------------------------------------
| Id  | Operation                         | Name           | Rows | Bytes | Cost (%CPU)| Time     | Pstart| Pstop |
-------------------------------------------------------------------------------------------------------------------
|   1 | HASH GROUP BY                     |                |    2 |   172 |     21 (10)| 00:00:01 |       |       |
|   2 | NESTED LOOPS                      |                |      |       |            |          |       |       |
|   3 | NESTED LOOPS                      |                |    2 |   172 |      20 (5)| 00:00:01 |       |       |
|   4 | NESTED LOOPS                      |                |    2 |   140 |      18 (6)| 00:00:01 |       |       |
|   5 | NESTED LOOPS                      |                |    2 |    98 |      16 (7)| 00:00:01 |       |       |
|*  6 | HASH JOIN                         |                |    2 |    72 |      14 (8)| 00:00:01 |       |       |
|*  7 | INDEX UNIQUE SCAN                 | TIMES_PK       |    1 |     8 |       1 (0)| 00:00:01 |       |       |
|   8 | PARTITION RANGE SINGLE            |                |   98 |  2744 |      12 (0)| 00:00:01 |      6|     6 |
|   9 | TABLE ACCESS BY LOCAL INDEX ROWID | SALES          |   98 |  2744 |      12 (0)| 00:00:01 |      6|     6 |
|  10 | BITMAP CONVERSION TO ROWIDS       |                |      |       |            |          |       |       |
|* 11 | BITMAP INDEX SINGLE VALUE         | SALES_TIME_BIX |      |       |            |          |      6|     6 |
|  12 | TABLE ACCESS BY INDEX ROWID       | CHANNELS       |    1 |    13 |       1 (0)| 00:00:01 |       |       |
|* 13 | INDEX UNIQUE SCAN                 | CHANNELS_PK    |    1 |       |       0 (0)| 00:00:01 |       |       |
|  14 | TABLE ACCESS BY INDEX ROWID       | PRODUCTS       |    1 |    21 |       1 (0)| 00:00:01 |       |       |
|* 15 | INDEX UNIQUE SCAN                 | PRODUCTS_PK    |    1 |       |       0 (0)| 00:00:01 |       |       |
|* 16 | INDEX UNIQUE SCAN                 | CUSTOMERS_PK   |    1 |       |       0 (0)| 00:00:01 |       |       |
|  17 | TABLE ACCESS BY INDEX ROWID       | CUSTOMERS      |    1 |    16 |       1 (0)| 00:00:01 |       |       |
-------------------------------------------------------------------------------------------------------------------

Predicate Information (identified by operation id):
---------------------------------------------------

   6 - access("T43903"."TIME_ID"="T43911"."TIME_ID")
   7 - access("T43911"."TIME_ID"=TO_DATE(' 1998-05-03 00:00:00', 'syyyy-mm-dd hh24:mi:ss'))
  11 - access("T43903"."TIME_ID"=TO_DATE(' 1998-05-03 00:00:00', 'syyyy-mm-dd hh24:mi:ss'))
  13 - access("T43814"."CHANNEL_ID"="T43903"."CHANNEL_ID")
  15 - access("T43866"."PROD_ID"="T43903"."PROD_ID")
  16 - access("T43841"."CUST_ID"="T43903"."CUST_ID")
```
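The `PARTITION RANGE SINGLE` step, with `Pstart` and `Pstop` both at 6, is the partition elimination at work: only one partition of SALES gets touched. As a rough illustration of why that matters, here's a toy Python model. It's a sketch of the general idea, not Oracle's implementation; the row layout and function names are made up for the example, and it ignores the bitmap index that the real plan also uses inside the partition.

```python
from collections import defaultdict
from datetime import date


def build_partitions(rows):
    """Group rows into per-year partitions, keyed on the year of time_id."""
    partitions = defaultdict(list)
    for row in rows:
        partitions[row["time_id"].year].append(row)
    return partitions


def sum_with_pruning(partitions, target):
    """Scan only the partition that can contain the target date."""
    examined = 0
    total = 0
    for row in partitions.get(target.year, []):
        examined += 1
        if row["time_id"] == target:
            total += row["amount_sold"]
    return total, examined


def sum_without_pruning(rows, target):
    """Scan every row, as an unpartitioned table scan would have to."""
    examined = 0
    total = 0
    for row in rows:
        examined += 1
        if row["time_id"] == target:
            total += row["amount_sold"]
    return total, examined


# Toy fact table: four years of data, four days per year
rows = [
    {"time_id": date(year, 5, day), "amount_sold": 10}
    for year in range(1998, 2002)
    for day in (1, 2, 3, 4)
]
partitions = build_partitions(rows)
target = date(1998, 5, 3)

print(sum_with_pruning(partitions, target))
print(sum_without_pruning(rows, target))
```

Both functions return the same total, but the pruned version examines only the rows in the 1998 partition. With no partitioning, that saving isn't available, however fast the underlying storage is.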

Let's now try moving those tables, in their entirety, into TimesTen for Exalytics 11.1.2.2.5.0, the version of TimesTen that ships with Exalytics 1.0.0.3. I could use a tool like ODI or GoldenGate to transport the data into the TimesTen database, but instead I'll use a new utility that comes with this release of TimesTen called ttImportFromOracle, which connects TimesTen to an Oracle database, creates TimesTen tables to reflect the Oracle table structures (but using more optimal TimesTen datatypes), and can take advantage of TimesTen for Exalytics' column-based compression, potentially reducing the space used when storing table data by up to a factor of 5.

```
Microsoft Windows [Version 6.1.7601]
Copyright (c) 2009 Microsoft Corporation. All rights reserved.

C:\Users\Administrator>cd c:\temp\tt

c:\TEMP\tt>mkdir tt_sh

c:\TEMP\tt>cd tt_sh

c:\TEMP\tt\tt_sh>C:\TimesTen\tt1122_64_3\support\ttImportFromOracle.exe -oraConn sh/password@orcl -compression 1 -tables sales promotions products customers channels times

Beginning processing
Resolving any tablename wildcards
Eliminating any duplicate tables
Getting metadata from source
Generating database user list
Assigning TimesTen datatypes
Analyzing source tables (this may take some time)
Analyzing table 'SH.SALES' ...
Analyzing table 'SH.PROMOTIONS' ...
Analyzing table 'SH.PRODUCTS' ...
Analyzing table 'SH.CUSTOMERS' ...
Analyzing table 'SH.CHANNELS' ...
Analyzing table 'SH.TIMES' ...
Estimating compression ratios
Generating output files
Finished processing
```
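As I understand it, the column-based compression mentioned above works along dictionary lines: repeated column values are stored once and rows hold a small reference to them. The Python below is a toy sketch of that general technique only, not TimesTen's actual implementation; the function names, the one-byte code size and the sample channel values are all assumptions for illustration.

```python
def dictionary_encode(values):
    """Replace each value with an integer code into a list of distinct values."""
    dictionary = []
    index = {}
    codes = []
    for value in values:
        if value not in index:
            index[value] = len(dictionary)
            dictionary.append(value)
        codes.append(index[value])
    return dictionary, codes


def dictionary_decode(dictionary, codes):
    """Rebuild the original column from the dictionary and the codes."""
    return [dictionary[code] for code in codes]


def estimated_ratio(values, code_bytes=1):
    """Rough raw-to-encoded size ratio, counting UTF-8 bytes only.

    code_bytes is an assumed fixed width per row reference.
    """
    raw = sum(len(value.encode("utf-8")) for value in values)
    dictionary, codes = dictionary_encode(values)
    packed = sum(len(value.encode("utf-8")) for value in dictionary)
    packed += code_bytes * len(codes)
    return raw / packed


# A low-cardinality column, in the style of a channel description
column = ["Direct Sales", "Internet", "Partners", "Direct Sales"] * 250
dictionary, codes = dictionary_encode(column)

print(dictionary)
print(round(estimated_ratio(column), 1))
```

The fewer distinct values a column has relative to its row count, the better this kind of encoding does, which is why the utility estimates compression ratios per table rather than quoting a single figure.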

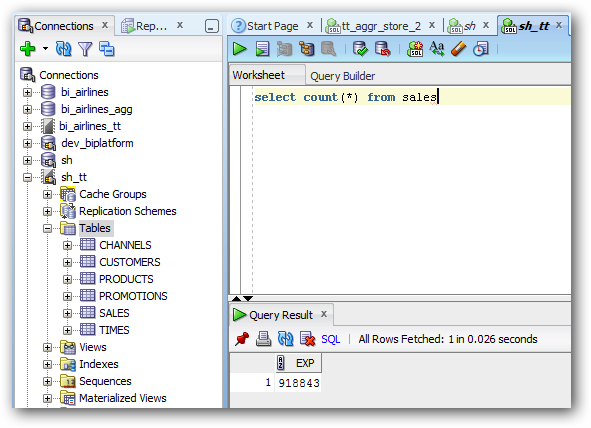

Using the utility gives me a set of scripts to create a corresponding TimesTen user for the Oracle database user, create the tables and indexes, load data from Oracle into the TimesTen tables, and then gather stats on the resulting TimesTen database (note that earlier releases of TimesTen don't come with this utility as standard, and the 11.1.2.2.4.x version's utility can't load into compressed TimesTen tables). Using the ttIsql command-line interface to the TimesTen server, I run the scripts, and then check out the resulting tables using SQL Developer.

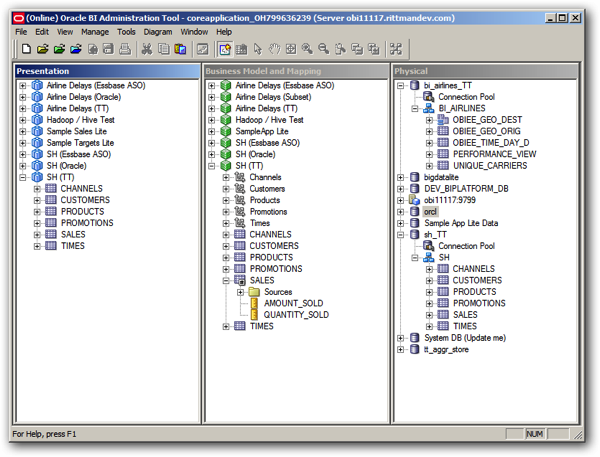

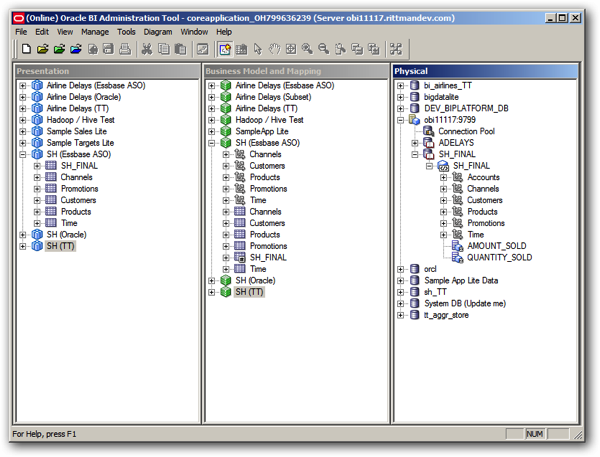

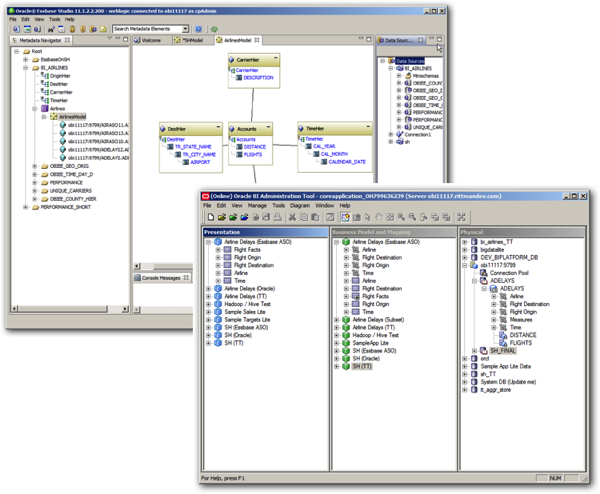

So that's 900K+ rows loaded into TimesTen, using compression, and easily fitting into my 1TB of RAM. Next step is to model the new tables in the RPD, like this:

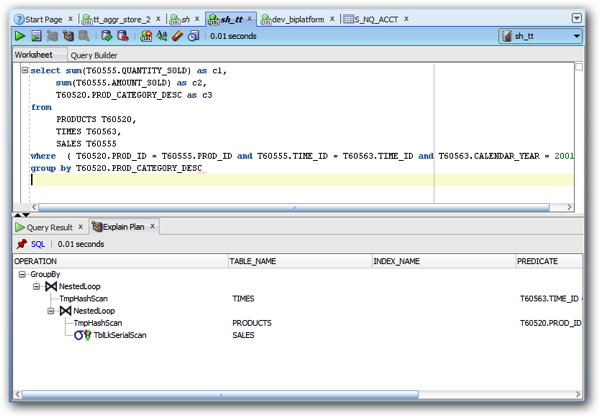

and then create the same set of reports. Running them feels roughly the same as the Oracle ones; maybe ever-so-slightly slower, but nothing really noticeable. And again, this is on a single-user system, whereas TimesTen really comes into its own with lots of concurrent users. Taking the TimesTen SQL query for the analysis equivalent to the Oracle one we explain-planned earlier on, though, you can see that there's no partition elimination going on, something that's going to hit TimesTen if we get noticeably bigger tables.

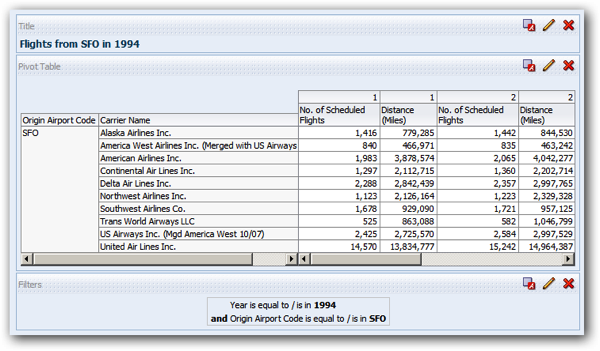

But overall, it's on a par. The Oracle source performs fine, and so does TimesTen. This is only just under a million rows of data though - what if we loaded a larger dataset, for example the Airline Delays dataset used for Exalytics demos, which has around 130 million rows in the main fact table? Loading the main fact table plus a handful of dimensions into TimesTen using compression takes up just under 8GB of RAM, so we're OK there, but what about response times? Let's start by trying out a few queries against the source Oracle database, on its own. The report below, summarising flights from SFO in 1994 by carrier and quarter, took around 4 seconds to run against my Oracle database source - again, not bad considering it had to sum up 130m rows of data.

Running the equivalent report against the TimesTen source took longer though, about 6 to 8 seconds, or up to double the Oracle time; not bad considering there's no partitioning or PQ, but there are still indexes, and the dataset is in-memory of course. It's still 8GB of RAM to store it, and it's not "speed of thought", if you know what I mean. So how about storing it in an Essbase ASO database then - how will that work? And how do you go about creating an Essbase ASO database?

UPDATE 1st August 2013: I've since discovered that there was a crucial, post-load step that I didn't perform with these TimesTen tables - running the Index Advisor. See this follow-up blog post on how the Index Advisor brought the TimesTen query response times down dramatically, and the final, concluding post where I discuss my overall findings.

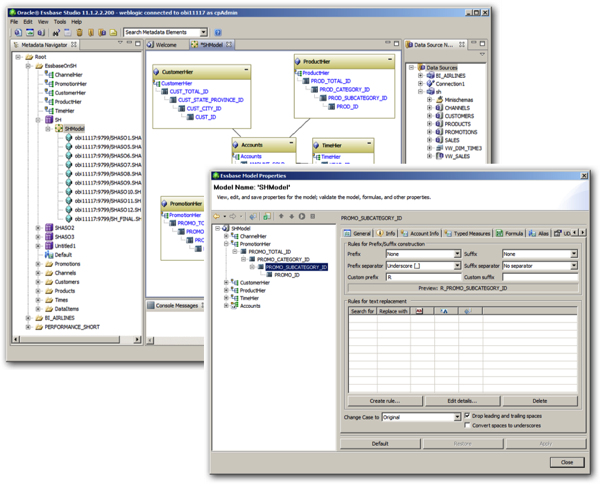

There are actually a number of ways that you could turn a relational star schema into an Essbase ASO database, including the new cube spin-off feature I blogged about the other week. Building the cube automatically assumes that your data is all lined up correctly though, and with Essbase there are lots of ways your source data can trip you up; for example, by default every dimension member ID and alias (description) needs to be unique, not only within a level, or even within a particular dimension, but in fact across all dimensions in the cube (database). Because of this, you often need to pre-process your incoming data to make dimension members unique in this way, and my preferred way to do this is through Essbase Studio, bundled and pre-integrated with Exalytics 1.0.0.3 and OBIEE 11.1.1.7.
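To make the uniqueness requirement concrete, here's a small Python sketch of the kind of pre-processing check involved. It isn't how Essbase Studio does it; the function names, the sample members and the "append dimension and level" renaming scheme are all hypothetical, chosen just to show the idea of finding names that clash across levels and dimensions and then disambiguating them.

```python
from collections import defaultdict


def find_clashes(levels):
    """Return member names that appear under more than one (dimension, level).

    levels maps (dimension, level) tuples to lists of member names.
    """
    seen = defaultdict(set)
    for key, members in levels.items():
        for member in members:
            seen[member].add(key)
    return {name: sorted(keys) for name, keys in seen.items() if len(keys) > 1}


def make_unique(levels):
    """Suffix clashing names with their dimension and level (assumed scheme)."""
    clashes = find_clashes(levels)
    return {
        (dim, level): [
            f"{member} ({dim} {level})" if member in clashes else member
            for member in members
        ]
        for (dim, level), members in levels.items()
    }


# Illustrative members only, not taken from the SH schema
levels = {
    ("Customers", "State"): ["New York", "California"],
    ("Customers", "City"): ["New York", "Los Angeles"],
    ("Products", "Category"): ["Electronics", "Unknown"],
    ("Channels", "Channel"): ["Internet", "Unknown"],
}

print(find_clashes(levels))
print(find_clashes(make_unique(levels)))
```

Here "New York" clashes within one dimension (state versus city) and "Unknown" clashes across two dimensions; after renaming, the second check comes back empty.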

Starting with the SH dataset, the resulting ASO database was only about 24MB in size (just the level-0 members, no aggregate views), and I then brought it into the BI Repository in a similar way to the TimesTen database.

Running reports and analyses, though, was a revelation. Whatever query I threw at it, however deep I drilled into the dataset, response times were instant, and that was even without adding any aggregate views (equivalent to materialised views with Oracle). Not a bad start, but what about doing the same with the Airlines dataset, 130m+ rows of flight data across many years and US states?

Well surprisingly, the 130m rows loaded in about a minute or so, and the resulting Essbase ASO database was only around 250MB in size, as opposed to the 8GB required by TimesTen for the same dataset. Even more surprisingly, all the data went in, and response time was either instant, or a second or so - noticeably faster than the TimesTen dataset, or even the Oracle dataset. So why is this, and how can Essbase ASO (which resides on disk) be faster than TimesTen (which runs in-memory)?
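To get a feel for the difference in storage footprint, here's the back-of-envelope arithmetic in Python. The inputs are the rounded figures quoted above ("just under 8GB", "around 250MB", "130m rows"), so treat the results as rough; the TimesTen figure also includes a handful of dimension tables, and the binary units are my assumption.

```python
ROWS = 130_000_000                # approximate fact table row count
TIMESTEN_BYTES = 8 * 1024 ** 3    # "just under 8GB", rounded up
ASO_BYTES = 250 * 1024 ** 2       # "around 250MB"


def bytes_per_row(total_bytes, rows):
    """Average storage per fact row, ignoring dimension overhead."""
    return total_bytes / rows


size_ratio = TIMESTEN_BYTES / ASO_BYTES
timesten_per_row = bytes_per_row(TIMESTEN_BYTES, ROWS)
aso_per_row = bytes_per_row(ASO_BYTES, ROWS)

print(f"TimesTen is about {size_ratio:.0f}x the size of the ASO database")
print(f"TimesTen: about {timesten_per_row:.0f} bytes per row")
print(f"Essbase ASO: about {aso_per_row:.0f} bytes per row")
```

On these numbers the ASO database works out at roughly a thirtieth of the size, at around a couple of bytes per source row against sixty-odd for TimesTen.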

There are actually a couple of reasons, and some important caveats too. As to why ASO is so fast - it's designed to be fast, with Hyperion reacting to competition back in the early 2000s from the likes of Microsoft, as well as Oracle with Oracle OLAP's compressed composites, and it's designed to store lots of detail-level data, across large numbers of dimensions, and aggregate it quickly. Contrast this with TimesTen, which started off as an OLTP database and only now is getting the sorts of analytic and VLDB features you'd expect from databases of this type.

But there are some important limitations that you need to be aware of, if you're thinking about moving an entire EBS-style database into Essbase ASO. First of all, you need to know Essbase, and you need to know Essbase's data rule limitations, and you also need to consider what happens when users want to query across multiple facts using conformed dimensions. Now you can do all of that using Essbase and techniques such as cube partitioning, dummy dimensions and so on, but it's not as simple as creating the equivalent TimesTen database tables, or even better, using the Summary Advisor to recommend aggregates based on usage patterns.

But if you're set on caching your entire detail-level dataset into Exalytics, think about using Essbase ASO as your storage engine, as it's designed from the ground-up to quickly analyse and aggregate large, sparse, datasets. For source data around the size of the SH Sales History star schema, there's not much in it, but for datasets of the size of Exalytics' Airline Delays dataset, you'll most probably notice the difference immediately.

Update 29th July 2013: The statement about Exalytics' memory-to-CPU bandwidth being less than its disk-to-CPU bandwidth was incorrect and was removed. Exalytics' memory bandwidth is actually (max) around 90GB/sec compared to the disk controller's 16-32GB/sec, and should therefore not be an impediment to TimesTen's performance. Apologies for any confusion caused.

Update 1st August 2013: See this blog post where I followed up the initial tests with some further optimisation of the TimesTen database, and this final post where I further optimised the Oracle and Essbase ASO data sources, and came up with some final conclusions on how to store detail-level data in Exalytics' memory.