Rittman Mead and Oracle Data Integrator 12c - Thoughts and Experiences So Far

I’m just finishing off my Christmas and New Year leave but tomorrow I’m taking part in a webcast recording with Oracle’s Data Integration product management team, on Rittman Mead’s experiences with ODI12c over the beta program and since general availability. You should be able to see the video when the event takes place on January 14th 2014, but I thought it’d be interesting to note down some of our thoughts, particularly from Jérôme Françoisse who did most of our beta testing in EMEA and wrote two blog posts - here and here - on ODI12c’s new features when the product came out.

As Stewart Bryson said in his blog post “My Journey to ODI12c”, probably the things we liked most about Oracle Warehouse Builder were the flow-based mappings, and the Oracle Database-optimed code it generated. For anybody moving from hand-written SQL and PL/SQL code to a graphical ETL tool, multi-step operator-based mappings weren’t too difficult to understand and they fitted our general approach to building processes out of components and data flows. ODI’s approach of using single source-to-target transformations, together with these strange things called “knowledge modules”, translated well to individual SQL statements but meant more complicated ETL processes had to be developed as lots of separate stages, often using cumbersome features such as temporary interfaces and interface datasets. Of course what we didn’t like about OWB was the complicated (and fragile) process around connectors, deployments, configurations and so forth, but the flow-based editor was the main feature we missed in ODI.

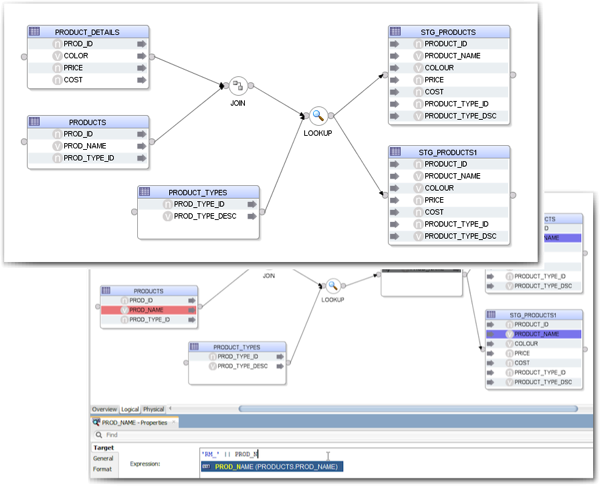

So when ODI12c introduced its own flow-based editor (as well as maintaining compatibility with the older ODI11g way of creating interfaces), we were really pleased. Jerome’s first post on 12c new features covers the new flow-based editor well, and we were also pleased to see net-new features such as in-mapping impact and lineage analysis, as shown in one of the screenshots below.

What this new mapper also gives us is the ability to reproduce almost any SQL query with ODI, giving us more control over the GROUP BY/HAVING aggregation clauses, better PIVOT/UNPIVOT abilities, better DISTINCT capabilities, and better control over set-based operations. As the ODI12c patches come through more operators have been added to the operator pallette, and it’s also nice now to be able to load more than one table at a time, using the multi-table insert feature - though there’s still no “table” component as we had with OWB, where we can define a target table on-the-fly in a mapping and then instantiate it in the database later on.

ODI12c also introduced a few new concepts that aren’t all that obvious when you first take a look at them. The first one we came across was “deployment specifications” - as Jerome said in his article, what these allow you to do is have more than one physical specification for your mapping (the new 12c word for interfaces), with one using an incremental load KM, for example, whilst the other using a bulk-load one. This is all about reusability, and simplifying your ETL code base - the one logical mapping from source systems to target drives both the initial data load, which might use SQL*Loader or another technology, and then can be used to do the incremental loads afterwards, without having to maintain two separate mappings and risk “code drift”.

On the subject of reusability, OWB for a while has had the concept of reusable mappings, and now ODI12c does. Similar in concept to procedures and packages in PL/SQL, reusable mappings provide an input and output and allow you to define a common process, which can then be dropped into another mapping. In practice we didn’t see these used much in OWB, so we’re not sure what the ODI12c take-up will be like, but this is what 11g temporary interfaces turn into when you upgrade to 12c, so you’ll certainly see them used in your projects.

ODI12c also introduces something called “component KMs”. Up until now, knowledge modules have effectively been scripts, using a substitution API to pull in table names, sources and so on and then running as “interpreted” code to move data around your system. Component KMs in contrast are “black box”, compiled integration pieces, seemingly brought over as part of the OWB integration piece that use the same approach (we assume) that OWB used for generating ETL code, and presumably were introduced to support migrations from OWB. We’re not sure where this one is going - one of the best features of ODI is the open nature of the standard knowledge modules so we hope that feature doesn’t get lost, but my take is that this is to support OWB migration use-cases though it might be a way of introducing more specialised integration features in the future.

(Update: see the comment from David Allen below where he explains a bit more about why component KMs were introduced).

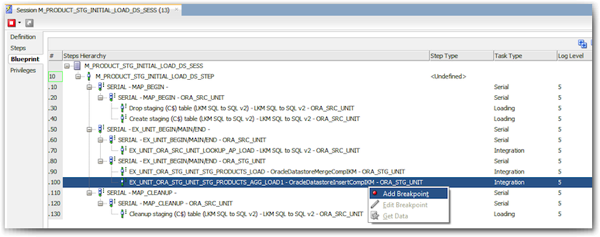

Other stuff that interested us in the new release included the debugger that’s now within ODI12c Studio, as shown in the screenshot below. Our view is that this feature is a bit of a “work in progress”, but it’s handy to be able to set breakpoints and query uncommitted data from the target database using the agent within Studio.

Another very useful feature that’s potentially a bit obscure though, is “blueprints”. This is really to address high-volume/velocity ODI use-cases where the actual process of retrieving process steps from the ODI repository, and logging of step results, slows the ETL process down and creates a bottleneck. With session blueprints, the steps are instead cached on each agent, and ODI only logs information relevant to the log level, rather than logging everything then removing the bits that aren’t relevant for lesser logging.

Obviously there’s lots more in terms of new features we were impressed with, but other notable ones were change notification when opening a mapping where its underlying data stores had changed; in-session parallelism so steps within a KM could run in-parallel, if there were no dependencies or requirements for serialisation, and a more consistent setup and management process that used concepts from WebLogic and Fusion Middleware - though install itself was a bit less flexible as we can’t select which components we want to install; It's either standalone (only for standalone agent) or Enterprise (with JEE agent, ODI Studio, SDK, …), which means that servers running headless have an un-needed install of ODI Studio, whilst developers have JEE components they don’t need in their install folders. Stewart raves about the new Fusion Middleware/WLST-based component management though, along with the new Data Integration Management Pack for Enterprise Manager 12c that got released just after 12c, so I’m looking forward to putting these things through their paces in the near future once the Christmas break is over.

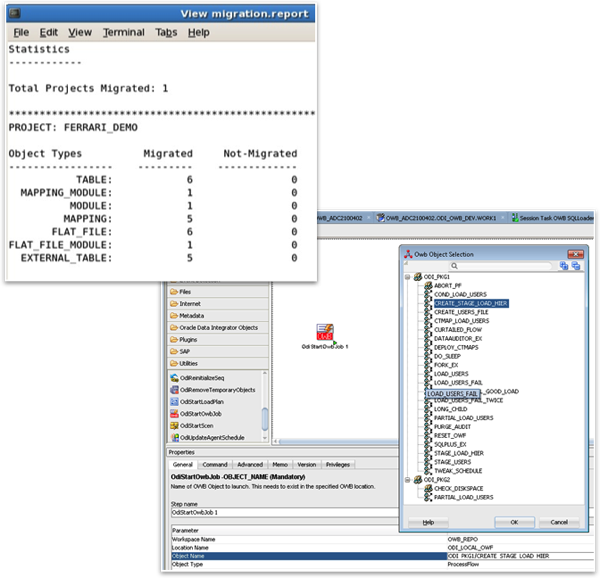

Now one thing that Jerome didn’t cover in his initial posts, as the feature came out via a patch after ODI12c first came out, is the OWB to ODI migration utility. This is actually the second part of the OWB-to-ODI story, as 12c also included a feature where ODI can run OWB processes from within an ODI package, as detailed in this blog post from Oracle that also talks about the migration utility and two of who’s screenshots I’ve used below (command-line migration utility on the left, selecting OWB objects to run “in place” from within ODI on the right).

This means that you’ve effectively got two ways that you can work with OWB processes in ODI - you can run them “in-place” from within ODI, something you’d probably do if the routine works well and there’s no point converting it to ODI, or you can migrate them into ODI, converting them to ODI mappings and then running them as normal ODI processes. Not everything comes across at this point - OWB process flows are the most visible part that’s missing, and according to Stewart who’s just finishing up an article for OTN on the topic, there are still bits you need to complete manually after a migration, but all of the business logic for the mapping comes through, which is what you’re really after.

There’s still a few pieces we’ve not really had the chance to look at closely - better integration with GoldenGate is one of the standout pieces in this area, and as I mentioned before the new EM Management Pack for Data Integration sounds like it’ll be a good complement to the database and BI ones, with in Stewart’s words “complete drill-through from Database to ODI and back again”. More from us on this and other data integration-related topics as the new year unfolds.