Taking a Look at the New Oracle Big Data SQL

Oracle launched their Oracle Big Data SQL product earlier this week, and it’ll be of interest to anyone who saw our series of posts a few weeks ago about the updated Oracle Information Management Reference Architecture, where Hadoop now sits alongside traditional Oracle data warehouses to provide what’s termed a “data reservoir”. In this type of architecture, Hadoop and its underlying technologies (HDFS, Hive and schema-on-read databases) provide an extension to the more structured relational Oracle data warehouses, making it possible to store and analyse much larger sets of data with much more diverse data types and structures. The issue that customers face when trying to implement this architecture is that Hadoop is a bit of a “wild west” in terms of data access methods, security and metadata, making it difficult for enterprises to come up with a consistent, over-arching data strategy that works for both types of data store.

Oracle Big Data SQL attempts to address this issue by providing a SQL access layer over Hadoop, managed by the Oracle database and integrated with the regular SQL engine within the database. Where it differs from SQL-on-Hadoop technologies such as Apache Hive and Cloudera Impala is that there’s a single unified data dictionary, a single Oracle SQL dialect and the full management capabilities of the Oracle database over both sources, giving you the ability to define access controls over both sources and to use full Oracle SQL (including analytic functions, complex joins and the like) without having to drop down into HiveQL or other Hadoop SQL dialects. Those of you who follow the blog or work with Oracle’s big data connector products probably know of a couple of current technologies that sound like this: Oracle Loader for Hadoop (OLH) is a bulk-unloader for Hadoop that copies Hive or HDFS data into an Oracle database, typically faster than a tool like Sqoop, whilst Oracle Direct Connector for HDFS (ODCH) gives the database the ability to define external tables over Hive or HDFS data, and then query that data using regular Oracle SQL.

Where ODCH falls short is that it treats the HDFS and Hive data as a single stream, making it easy to read once but, like regular external tables, slow to access frequently as there’s no ability to define indexes over the Hadoop data. OLH is also good, but you can only use it to bulk-load data into Oracle; you can’t use it to query data in-place. Oracle Big Data SQL uses an approach similar to ODCH but, crucially, it uses some Exadata concepts to move processing down to the Hadoop cluster, just as Exadata moves processing down to the Exadata storage cells (so much so that the project was called “Project Exadoop” internally within Oracle up to the launch). That also means it's Exadata-only, and not available for Oracle Databases running on non-Exadata hardware.

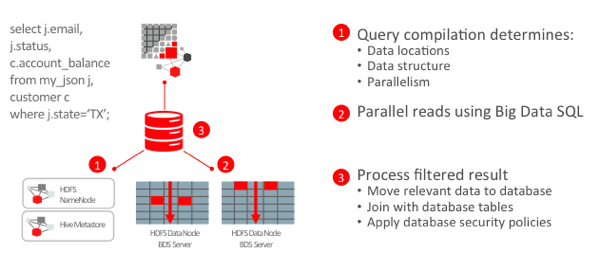

As explained in the launch blog post by Oracle’s Dan McClary, Oracle Big Data SQL includes components that install on the Hadoop cluster nodes and provide the same “SmartScan” functionality that Exadata uses to reduce network traffic between storage servers and compute servers. In the case of Big Data SQL, this SmartScan functionality retrieves just the columns of data requested in the query (a process referred to as “column projection”), and sends back only those rows that match the query predicate.
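To make the idea a bit more concrete, here’s a toy sketch in Python of what column projection and predicate filtering at the storage node buy you. It isn’t how Big Data SQL is actually implemented, and everything in it (the `orders` rows, the column names, and the `full_scan`, `smart_scan` and `cells_shipped` functions) is made up purely for illustration; it just compares how much data has to cross the network in each case.

```python
from typing import Callable, Dict, List

Row = Dict[str, object]

# Hypothetical rows held on a single Hadoop data node (illustrative data only).
NODE_ROWS: List[Row] = [
    {"order_id": 1, "customer": "acme", "country": "UK", "amount": 120.0},
    {"order_id": 2, "customer": "globex", "country": "US", "amount": 75.5},
    {"order_id": 3, "customer": "initech", "country": "UK", "amount": 310.0},
    {"order_id": 4, "customer": "umbrella", "country": "DE", "amount": 42.0},
]


def full_scan(rows: List[Row]) -> List[Row]:
    """Ship every row and every column; the database filters afterwards."""
    return [dict(row) for row in rows]


def smart_scan(
    rows: List[Row], columns: List[str], predicate: Callable[[Row], bool]
) -> List[Row]:
    """Apply the predicate and the column projection on the node itself."""
    return [{c: row[c] for c in columns} for row in rows if predicate(row)]


def cells_shipped(rows: List[Row]) -> int:
    """Crude stand-in for network traffic: count the values sent back."""
    return sum(len(row) for row in rows)


# Roughly: SELECT order_id, amount FROM orders WHERE country = 'UK'
wanted_columns = ["order_id", "amount"]


def is_uk(row: Row) -> bool:
    return row["country"] == "UK"


shipped_naive = full_scan(NODE_ROWS)
shipped_smart = smart_scan(NODE_ROWS, wanted_columns, is_uk)

# Without pushdown, the database does the same filtering after the transfer.
db_side_result = [
    {c: row[c] for c in wanted_columns} for row in shipped_naive if is_uk(row)
]

print(db_side_result == shipped_smart)  # same answer either way
print(cells_shipped(shipped_naive), cells_shipped(shipped_smart))
```

Both approaches return the same two rows, but in this toy case the pushed-down scan sends back 4 values rather than 16; on a real cluster with wide tables and selective predicates, that gap is where the saving in network traffic comes from.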

Combined with Hive’s ability to map unstructured data sources into regular columns and tables, and Big Data SQL’s support for Oracle NoSQL Database, the promise of this new technology is the ability to run queries across relational, Hadoop and NoSQL data sources using a common data dictionary and a common set of identity and data access controls.

There are a few potential downsides, though. First off, Big Data SQL will only be available as part of Oracle Big Data Appliance which, though an impressive bit of hardware and software, is a much smaller market than the total set of Oracle customers looking to combine relational and Hadoop-based data. It’s also restricted to Oracle 12c on Exadata, meaning you'll most probably need to do a database upgrade even if you've already got the required Exadata servers in place. Finally, it's also restricted to the Oracle-specific distribution of Cloudera Hadoop, though if you’re using the BDA you’ll be using this anyway.

My other concern, though, is that Oracle will now focus on SQL as their only access mechanism into Hadoop and big data, in a similar way to how they focused on SQL as their access route into OLAP when they incorporated Oracle Express into the Oracle Database, back in the mid-2000s. Focusing on SQL over multidimensional languages such as Express 4GL and MDX meant you missed the real point of using a multidimensional OLAP database, which of course was being able to use a multidimensional query language. My concern with Big Data SQL is that we’ll end up focusing on SQL rather than on tools and languages such as Spark, Pig and NoSQL query languages which, combined with schema-on-read, are the real differentiator for Hadoop-based systems. As long as Big Data SQL is positioned as a “bonus” (a convenient way of getting data out of Hadoop once it’s been processed and analysed using more Hadoop-native technologies), it will be a great enabling and acceptance technology for enterprises, rather than one that ends up restricting them.

We’re not aware of any beta program, and I don’t think the launch webcast mentioned a specific date or BDA version for when Big Data SQL will be out, but with OpenWorld coming up soon I’d expect to hear more about this over the next few months. We’re currently involved in a couple of significant Oracle Big Data Appliance implementations, and this product would address a real, pressing need for our customers, so I’m looking forward to getting more involved with it as it becomes available.

This article was updated on 18th July to add the fact that Big Data SQL is only available on Exadata, and is not a generic Oracle Database 12c technology.