Using Oracle GoldenGate for Trickle-Feeding RDBMS Transactions into Hive and HDFS

A few months ago I wrote a post on the blog around using Apache Flume to trickle-feed log data into HDFS and Hive, using the Rittman Mead website as the source for the log entries. Flume is a good technology to use for this type of capture requirement as it captures log entries, HTTP calls, JMS queue entries and other “event” sources easily, has a resilient architecture and integrates well with HDFS and Hive. But what if the source you want to capture activity for is a relational database, for example Oracle Database 12c? With Flume you’d need to spool the database transactions to file, whereas what you really want is a way to directly connect to the database engine and capture the changes from source.

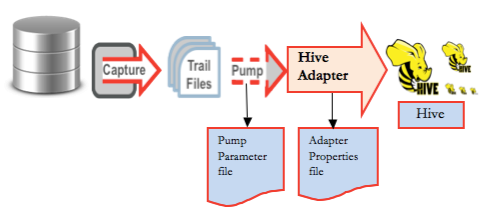

Which is exactly what Oracle GoldenGate does, and what most people don’t realise is that GoldenGate can also load data into HDFS and Hive, as well as the usual database targets. Hive and HDFS aren’t fully-supported targets yet, you can use the Oracle GoldenGate for Java adapter to act as the handler process and then land the data in HDFS files or Hive tables on your target Hadoop platform. My Oracle Support has two tech nodes, "Integrating OGG Adapter with Hive (Doc ID 1586188.1)” and "Integrating OGG Adapter with HDFS (Doc ID 1586210.1)” that give example implementations of the Java adapters you’d need for these two target types, with the overall end-to-end process for landing Hive data looking like the diagram below (and the HDFS one just swapping out HDFS for Hive at the handler adapter stage)

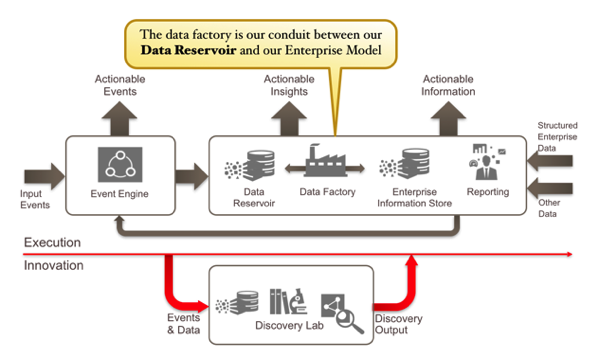

This is also a good example of the sorts of technology we’d use to implement the “data factory” concept within the new Oracle Information Management Reference Architecture, the part of the architecture that moves data between the Hadoop and NoSQL-based Data Reservoir, and the relationally-stored enterprise information store; in this case, trickle-feeding transactional data from the Oracle database into Hadoop, perhaps to archive it at lower-cost than we could do in an Oracle database, or to add transaction activity data to a Hadoop-based application

So I asked my colleague Nelio Guimaraes to set up a GoldenGate capture process on our Cloudera CDH5.1 Hadoop cluster, using GoldenGate 12.1.2.0.0 for our source Oracle 11gR2 database and Oracle GoldenGate for Java, downloadable separately on edelivery.oracle.com under Oracle Fusion Middleware > Oracle GoldenGate Application Adapters 11.2.1.0.0 for JMS and Flat File Media Pack. In our example, we’re going to capture activity on the SCOTT.EMP table in the Oracle database, and then perform the following step to set up replication from it into a replica Hive table:

- Create a table in Hive that corresponds to the table in Oracle database.

- Create a table in the Oracle database and prepare the table for replication.

- Configure the Oracle GoldenGate Capture to extract transactions from the Oracle database and create the trail file.

- Configure the Oracle GoldenGate Pump to read the trail and invoke the custom adapter

- Configure the property file for the Hive handler

- Code, Compile and package the custom Hive handler

- Execute a test.

Setting up the Oracle Database Source Capture

Let’s go into the Oracle database first, check the table definition, and then connect to Hadoop to create a Hive table of the same column definition.

[oracle@centraldb11gr2 ~]$ sqlplus scott/tiger SQL*Plus: Release 11.2.0.3.0 Production on Thu Sep 11 01:08:49 2014 Copyright (c) 1982, 2011, Oracle. All rights reserved. Connected to: Oracle Database 11g Enterprise Edition Release 11.2.0.3.0 - 64bit Production With the Partitioning, Oracle Label Security, OLAP, Data Mining, Oracle Database Vault and Real Application Testing options SQL> describe DEPT Name Null? Type ----------------------------------------- -------- ---------------------------- DEPTNO NOT NULL NUMBER(2) DNAME VARCHAR2(14) LOC VARCHAR2(13) SQL> exit ... [oracle@centraldb11gr2 ~]$ ssh oracle@cdh51-node1 Last login: Sun Sep 7 16:11:36 2014 from officeimac.rittmandev.com [oracle@cdh51-node1 ~]$ hive ... create external table dept ( DEPTNO string, DNAME string, LOC string ) row format delimited fields terminated by '\;' stored as textfile location '/user/hive/warehouse/department'; exit ...

Then I install Oracle Golden Gate 12.1.2 on the source Oracle database, just as you’d do for any Golden Gate install, and make sure supplemental logging is enabled for the table I’m looking to capture. Then I go into the ggsci Golden Gate command-line utility, to first register the user it’ll be connecting as, and what table it needs to capture activity for.

[oracle@centraldb11gr2 12.1.2]$ cd /u01/app/oracle/product/ggs/12.1.2/ [oracle@centraldb11gr2 12.1.2]$ ./ggsci $ggsci> DBLOGIN USERID sys@ctrl11g, PASSWORD password sysdba $ggsci> ADD TRANDATA SCOTT.DEPT COLS(DEPTNO), NOKEY

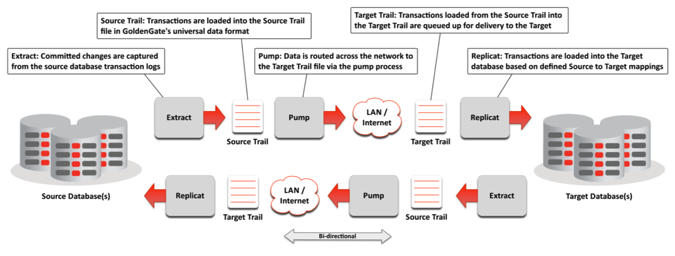

GoldenGate uses a number of components to replicate data from source to targets, as shown in the diagram below.

For our purposes, though, there are just three that we need to configure; the Extract component, which captures table activity on the source; the Pump process that moves data (or the “trail”) from source database to the Hadoop cluster; and the Replicat component that takes that activity and applies it to the target tables. In our example, the extract and pump processes will be as normal, but we need to create a custom “handler” for the target Hive table that uses the Golden Gate Java API and the Hadoop FS Java API.

For our purposes, though, there are just three that we need to configure; the Extract component, which captures table activity on the source; the Pump process that moves data (or the “trail”) from source database to the Hadoop cluster; and the Replicat component that takes that activity and applies it to the target tables. In our example, the extract and pump processes will be as normal, but we need to create a custom “handler” for the target Hive table that uses the Golden Gate Java API and the Hadoop FS Java API.

The tool we use to set up the extract and capture process is ggsci, the command-line Golden Gate Software Command Interface. I’ll use it first to set up the Manager process that runs on both source and target servers, giving it a port number and connection details into the source Oracle database.

$ggsci> edit params mgr PORT 7809 USERID sys@ctrl11g, PASSWORD password sysdba PURGEOLDEXTRACTS /u01/app/oracle/product/ggs/12.1.2/dirdat/*, USECHECKPOINTS

Then I create two configuration files, one for the extract process and one for the pump process, and then use those to start those two processes.

$ggsci> edit params ehive EXTRACT ehive USERID sys@ctrl11g, PASSWORD password sysdba EXTTRAIL /u01/app/oracle/product/ggs/12.1.2/dirdat/et, FORMAT RELEASE 11.2 TABLE SCOTT.DEPT; $ggsci> edit params phive EXTRACT phive RMTHOST cdh51-node1.rittmandev.com, MGRPORT 7809 RMTTRAIL /u01/app/oracle/product/ggs/11.2.1/dirdat/rt, FORMAT RELEASE 11.2 PASSTHRU TABLE SCOTT.DEPT; $ggsci> ADD EXTRACT ehive, TRANLOG, BEGIN NOW $ggsci> ADD EXTTRAIL /u01/app/oracle/product/ggs/12.1.2/dirdat/et, EXTRACT ehive $ggsci> ADD EXTRACT phive, EXTTRAILSOURCE /u01/app/oracle/product/ggs/12.1.2/dirdat/et $ggsci> ADD RMTTRAIL /u01/app/oracle/product/ggs/11.2.1/dirdat/rt, EXTRACT phive

As the Java event handler on the target Hadoop platform won’t be able to ordinarily get table metadata for the source Oracle database, we’ll use the defgen utility on the source platform to create the parameter file that the replicat process will need.

$ggsci> edit params dept defsfile ./dirsql/DEPT.sql USERID ggsrc@ctrl11g, PASSWORD ggsrc TABLE SCOTT.DEPT; ./defgen paramfile ./dirprm/dept.prm NOEXTATTR

Note that NOEXTATTR means no extra attributes; because the version on target is a generic and minimal version, the definition file with extra attributes won't be interpreted. Then, this DEPT.sql file will need to be copied across to the target Hadoop platform where you’ve installed Oracle GoldenGate for Java, to the /dirsql folder within the GoldenGate install.

[oracle@centraldb11gr2 12.1.2]$ ssh oracle@cdh51-node1 oracle@cdh51-node1's password: Last login: Wed Sep 10 17:05:49 2014 from centraldb11gr2.rittmandev.com [oracle@cdh51-node1 ~]$ cd /u01/app/oracle/product/ggs/11.2.1/ [oracle@cdh51-node1 11.2.1] $ pwd/u01/app/oracle/product/ggs/11.2.1 [oracle@cdh51-node1 11.2.1]$ ls dirsql/ DEPT.sql

Then, going back to the source Oracle database platform, we’ll start the Golden Gate Monitor process, and then the extract and pump processes.

[oracle@cdh51-node1 11.2.1]$ ssh oracle@centraldb11gr2 oracle@centraldb11gr2's password: Last login: Thu Sep 11 01:08:18 2014 from bdanode1.rittmandev.com GGSCI (centraldb11gr2.rittmandev.com) 7> start mgr Manager started. GGSCI (centraldb11gr2.rittmandev.com) 8> start ehive Sending START request to MANAGER ... EXTRACT EHIVE starting GGSCI (centraldb11gr2.rittmandev.com) 9> start phive Sending START request to MANAGER ... EXTRACT PHIVE starting

Setting up the Hadoop / Hive Replicat Process

Setting up the Hadoop side involves a couple of similar steps to the source capture side; first we configure the parameters for the Manager process, then configure the extract process that will pull table activity off of the trail file, sent over by the pump process on the source Oracle database.

[oracle@centraldb11gr2 12.1.2]$ ssh oracle@cdh51-node1 oracle@cdh51-node1's password: Last login: Wed Sep 10 21:09:38 2014 from centraldb11gr2.rittmandev.com [oracle@cdh51-node1 ~]$ cd /u01/app/oracle/product/ggs/11.2.1/ [oracle@cdh51-node1 11.2.1]$ ./ggsci $ggsci> edit params mgr PORT 7809 PURGEOLDEXTRACTS /u01/app/oracle/product/ggs/11.2.1/dirdat/*, usecheckpoints, minkeepdays 3 $ggsci> add extract tphive, exttrailsource /u01/app/oracle/product/ggs/11.2.1/dirdat/rt $ggsci> edit params tphive EXTRACT tphive SOURCEDEFS ./dirsql/DEPT.sql CUserExit ./libggjava_ue.so CUSEREXIT PassThru IncludeUpdateBefores GETUPDATEBEFORES TABLE SCOTT.DEPT;

Now it’s time to create the Java hander that will write the trail data to the HDFS files and Hive table. The My Oracle Support Doc.ID 1586188.1 I mentioned at the start of the article has a sample Java program called SampleHandlerHive.java that writes incoming transactions into an HDFS file within the Hive directory, and also writes it to a file on the local filesystem. To get this working on our Hadoop system, we created a new java source code file from the content in SampleHandlerHive.java, updated the path from hadoopConf.addResource to point the the correct location of core-site.xml, hdfs-site.xml and mapred-site.xml, and then compiled it as follows:

export CLASSPATH=/u01/app/oracle/product/ggs/11.2.1/ggjava/ggjava.jar:/opt/cloudera/parcels/CDH-5.1.0-1.cdh5.1.0.p0.53/lib/hadoop/client/* javac -d . SampleHandlerHive.java

Successfully executing the above command created the SampleHiveHandler.class under /u01/app/oracle/product/ggs/11.2.1//dirprm/com/mycompany/bigdata. To create the JAR file that the GoldenGate for Java adapter will need, I then need to change directory to the /dirprm directory under the Golden Gate install, and then run the following commands:

jar cvf myhivehandler.jar com chmod 755 myhivehandler.jar

I also need to create a properties file for this JAR to use, in the same /dirprm directory. This properties file amongst other things tells the Golden Gate for Java adapter where in HDFS to write the data to (the location where the Hive table keeps its data files), and also references any other JAR files from the Hadoop distribution that it’ll need to get access to.

[oracle@cdh51-node1 dirprm]$ cat tphive.properties

#Adapter Logging parameters.

gg.log=log4j

gg.log.level=info

#Adapter Check pointing parameters

goldengate.userexit.chkptprefix=HIVECHKP_

goldengate.userexit.nochkpt=true

# Java User Exit Property

goldengate.userexit.writers=jvm

jvm.bootoptions=-Xms64m -Xmx512M -Djava.class.path=/u01/app/oracle/product/ggs/11.2.1/ggjava/ggjava.jar:/u01/app/oracle/product/ggs/11.2.1/dirprm:/u01/app/oracle/product/ggs/11.2.1/dirprm/myhivehandler.jar:/opt/cloudera/parcels/CDH-5.1.0-1.cdh5.1.0.p0.53/lib/hadoop/client/hadoop-common-2.3.0-cdh5.1.0.jar:/opt/cloudera/parcels/CDH-5.1.0-1.cdh5.1.0.p0.53/lib/hadoop/lib/commons-configuration-1.6.jar:/opt/cloudera/parcels/CDH-5.1.0-1.cdh5.1.0.p0.53/lib/hadoop/lib/commons-logging-1.1.3.jar:/opt/cloudera/parcels/CDH-5.1.0-1.cdh5.1.0.p0.53/lib/hadoop/lib/commons-lang-2.6.jar:/opt/cloudera/parcels/CDH-5.1.0-1.cdh5.1.0.p0.53/etc/hadoop:/opt/cloudera/parcels/CDH-5.1.0-1.cdh5.1.0.p0.53/etc/hadoop/conf.dist:/opt/cloudera/parcels/CDH-5.1.0-1.cdh5.1.0.p0.53/lib/hadoop/lib/guava-11.0.2.jar:/opt/cloudera/parcels/CDH-5.1.0-1.cdh5.1.0.p0.53/lib/hadoop/hadoop-auth-2.3.0-cdh5.1.0.jar:/opt/cloudera/parcels/CDH-5.1.0-1.cdh5.1.0.p0.53/lib/hadoop/client/hadoop-hdfs-2.3.0-cdh5.1.0.jar:/opt/cloudera/parcels/CDH-5.1.0-1.cdh5.1.0.p0.53/lib/hadoop/client/commons-cli-1.2.jar:/opt/cloudera/parcels/CDH-5.1.0-1.cdh5.1.0.p0.53/lib/hadoop/client/protobuf-java-2.5.0.jar

#Properties for reporting statistics

# Minimum number of {records, seconds} before generating a report

jvm.stats.time=3600

jvm.stats.numrecs=5000

jvm.stats.display=TRUE

jvm.stats.full=TRUE

#Hive Handler.

gg.handlerlist=hivehandler

gg.handler.hivehandler.type=com.mycompany.bigdata.SampleHandlerHive

gg.handler.hivehandler.HDFSFileName=/user/hive/warehouse/department/dep_data

gg.handler.hivehandler.RegularFileName=cinfo_hive.txt

gg.handler.hivehandler.RecordDelimiter=;

gg.handler.hivehandler.mode=tx

Now, the final step on the Hadoop side is to start its Golden Gate Manager process, and then start the Replicat and apply process.

GGSCI (cdh51-node1.rittmandev.com) 5> start mgr Manager started. GGSCI (cdh51-node1.rittmandev.com) 6> start tphive Sending START request to MANAGER ... EXTRACT TPHIVE starting

Testing it All Out

So now I’ve got the extract and pump processes running on the Oracle Database side, and the apply process running on the Hadoop side, let’s do a quick test and see if it’s working. I’ll start by looking at what data is in each table at the beginning.

SQL> select * from dept;

DEPTNO DNAME LOC

---------- -------------- -------------

10 ACCOUNTING NEW YORK

20 RESEARCH DALLAS

30 SALES CHICAGO

40 OPERATIONS BOSTON

50 TESTE PORTO

60 NELIO STS

70 RAQUEL AVES

7 rows selected.

Over on the Hadoop side, there’s just one row in the Hive table:

hive> select * from customer; OK 80MARCIA ST

Now I’ll go back to Oracle and insert a new row in the DEPT table:

SQL> insert into dept (deptno, dname, loc) 2 values (75, 'EXEC','BRIGHTON'); 1 row created. SQL> commit; Commit complete.

And, going back over to Hadoop, I can see Golden Gate has added that record to the Hive table, by the Golden Gate for Java adapter writing the transaction to the underlying HDFS file.

hive> select * from customer; OK 80MARCIA ST 75 EXEC BRIGHTON

So there you have it; Golden Gate replicating Oracle RBDMS transactions into HDFS and Hive, to complement Apache Flume’s ability to replicate log and event data into Hadoop. Moreover, as Michael Rainey explained in this three part blog series, Golden Gate is closely integrated into the new 12c release of Oracle Data Integrator, making it even easier to manage Golden Gate replication processes into your overall data loading project, and giving Hadoop developers and Golden Gate users access to the full set of load orchestration and data quality features in that product rather than having to rely on home-grown scripting, or Oozie.