Adding Oracle Big Data SQL to ODI12c to Enhance Hive Data Transformations

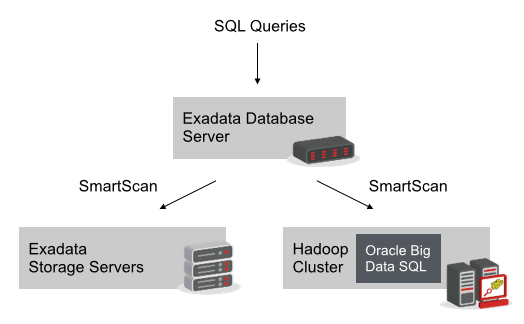

An updated version of the Oracle BigDataLite VM came out a couple of weeks ago. As well as updating the core Cloudera CDH software to the latest release, it also includes Oracle Big Data SQL, the SQL access layer over Hadoop that I covered on the blog a few months ago. Big Data SQL takes the SmartScan technology from Exadata and extends it to Hadoop, presenting Hive tables and HDFS files as Oracle external tables and pushing down the filtering and column selection of data to individual Hadoop nodes. Any table registered in the Hive metastore can be exposed as an external table in Oracle, and a Big Data SQL agent installed on each Hadoop node gives those nodes the ability to understand full Oracle SQL syntax rather than the cut-down SQL dialect that you get with Hive.

There are two immediate use cases that come to mind when you think about Big Data SQL in the context of BI and data warehousing. First, you can use Big Data SQL to include Hive tables in regular Oracle set-based ETL transformations, giving you the ability to reference Hive data during part of your data load. Second, you can use Big Data SQL as a way to access Hive tables from OBIEE, rather than having to go through Hive or Impala ODBC drivers. Let’s start off in this post by looking at the ETL scenario using ODI12c as the data integration environment, and I’ll come back to the BI example later in the week.

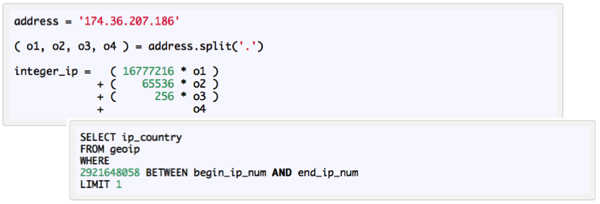

You may recall that in a couple of posts earlier in the year on ETL and data integration on Hadoop, I looked at a scenario where I wanted to geo-code web server log transactions using an IP address range lookup file from a company called MaxMind. To determine the country for a given IP address you need to locate that address within the ranges listed in the lookup file, something that’s easy to do with a full SQL dialect such as that provided by Oracle.
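As a rough sketch of the kind of range lookup described above, the snippet below joins a log table to a range table with BETWEEN. It uses Python's built-in SQLite purely as a stand-in for Oracle so that it can be run anywhere; the table names, column names (apart from `ip_integer`, which appears later in this post) and the sample rows are all made up for illustration and are not the real MaxMind data.

```python
import sqlite3


def lookup_countries():
    """Join log rows to IP ranges with BETWEEN, using SQLite as a stand-in for Oracle."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        -- Hypothetical tables; names and rows are illustrative only.
        CREATE TABLE access_log (hostname TEXT, ip_integer INTEGER);
        CREATE TABLE geoip_country (
            start_ip_int INTEGER, end_ip_int INTEGER, country_name TEXT);
        INSERT INTO access_log VALUES ('host-a', 16777300), ('host-b', 33554500);
        INSERT INTO geoip_country VALUES
            (16777216, 16777471, 'Country A'),
            (33554432, 34603007, 'Country B');
    """)
    # The join condition is a range test rather than an equality test.
    rows = conn.execute("""
        SELECT a.hostname, g.country_name
        FROM   access_log a
        JOIN   geoip_country g
        ON     a.ip_integer BETWEEN g.start_ip_int AND g.end_ip_int
        ORDER  BY a.hostname
    """).fetchall()
    conn.close()
    return rows


if __name__ == "__main__":
    for hostname, country in lookup_countries():
        print(hostname, country)
```

The important part is the `ON ... BETWEEN ... AND ...` condition: each log row matches whichever range contains its integer IP address, which is exactly the non-equi-join that the rest of this post is trying to achieve.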

In my case, I’d want to join my Hive table of server log entries with a Hive table containing the IP address ranges, using the BETWEEN operator, except that Hive doesn’t support any type of join other than an equi-join. You can use Impala and a BETWEEN clause there, but in my testing, joining anything other than a relatively small Hive table of log entries took massive amounts of memory because Impala works in-memory, which effectively ruled out doing the geo-lookup set-based. I then went on to do the lookup using Pig and a Python API into the geocoding database, but then you’ve got to learn Pig. I finally came up with my best solution using Hive streaming and a Python script that called that same API, but each of these is fairly involved and requires a bit of skill and experience from the developer.
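To give a feel for what the row-by-row workaround involves, here is a minimal sketch of the lookup logic a streaming-style Python script might contain. It is not the script from the earlier posts: instead of calling the geocoding API it searches a small in-memory list of made-up ranges, and it assumes that each input row is tab-separated with a dotted IPv4 address in the first field. All function names are hypothetical.

```python
import bisect
import ipaddress


def ip_to_int(dotted):
    """Convert a dotted IPv4 address such as '10.0.0.1' to its integer form."""
    return int(ipaddress.ip_address(dotted))


# Made-up ranges (start, end, country), sorted by start address.
RANGES = [
    (ip_to_int("10.0.0.0"), ip_to_int("10.0.0.255"), "Country A"),
    (ip_to_int("10.0.2.0"), ip_to_int("10.0.2.255"), "Country B"),
]
STARTS = [start for start, _end, _country in RANGES]


def country_for(ip_int):
    """Return the country whose range contains ip_int, or None if no range does."""
    # Find the last range that starts at or before ip_int, then check its end.
    i = bisect.bisect_right(STARTS, ip_int) - 1
    if i >= 0 and ip_int <= RANGES[i][1]:
        return RANGES[i][2]
    return None


def geocode_lines(lines):
    """Append a country column to tab-separated rows (IP address in field one)."""
    for line in lines:
        fields = line.rstrip("\n").split("\t")
        country = country_for(ip_to_int(fields[0]))
        yield "\t".join(fields + [country or "unknown"])


if __name__ == "__main__":
    import sys
    for out in geocode_lines(sys.stdin):
        print(out)
```

Run as a script, this reads rows on standard input and writes them back with an extra column, which is the general shape Hive expects from a script invoked through its TRANSFORM clause. Even in this cut-down form there is noticeably more to write and maintain than a single join condition.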

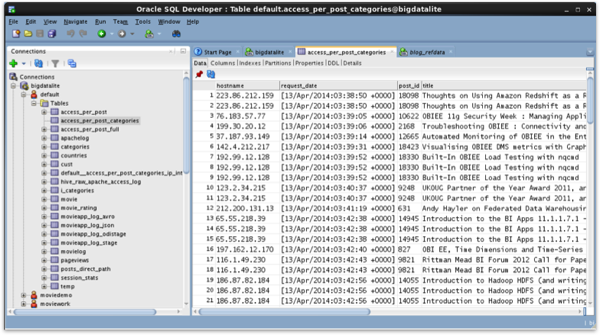

But this of course is where Big Data SQL could be useful. If I could expose the Hive table containing my log file entries as an Oracle external table and then join that within Oracle to an Oracle-native lookup table, I could do my join using the BETWEEN operator and then output the join results to a temporary Oracle table; once that’s done I could then use ODI12c’s Sqoop functionality to copy the results back down to Hive for the rest of the ETL process. Looking at my Hive database using SQL*Developer 4.0.3’s new ability to work with Hive tables, I can see the table I’m interested in listed there:

and I can also see it listed in the DBA_HIVE_TABLES static view that comes with Big Data SQL on Oracle Database 12c:

    SQL> select database_name, table_name, location
      2  from dba_hive_tables
      3  where table_name like 'access_per_post%';

    DATABASE_N TABLE_NAME                     LOCATION
    ---------- ------------------------------ --------------------------------------------------
    default    access_per_post                hdfs://bigdatalite.localdomain:8020/user/hive/ware
                                              house/access_per_post
    default    access_per_post_categories     hdfs://bigdatalite.localdomain:8020/user/hive/ware
                                              house/access_per_post_categories
    default    access_per_post_full           hdfs://bigdatalite.localdomain:8020/user/hive/ware
                                              house/access_per_post_full

There are various ways to create the Oracle external tables over Hive tables in the linked Hadoop cluster, including using the new DBMS_HADOOP package to create the Oracle DDL from the Hive metastore table definitions, or using SQL*Developer Data Modeler to generate the DDL from modelled Hive tables. But if you know the Hive table definition and it’s not too complicated, you might as well just write the DDL statement yourself using the new ORACLE_HIVE external table access driver. In my case, to create the corresponding external table for the Hive table I want to geo-code, it looks like this:

    CREATE TABLE access_per_post_categories (
      hostname     varchar2(100),
      request_date varchar2(100),
      post_id      varchar2(10),
      title        varchar2(200),
      author       varchar2(100),
      category     varchar2(100),
      ip_integer   number
    )
    organization external (
      type oracle_hive
      default directory default_dir
      access parameters (com.oracle.bigdata.tablename=default.access_per_post_categories)
    );
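If you have several Hive tables to expose and want to stick with hand-written DDL, the statement above is regular enough to template. The helper below is only a sketch: the function name is hypothetical, it does no Hive-to-Oracle type mapping (you supply the Oracle column types yourself), and it does not validate identifiers, so it is only suitable for names you control.

```python
def oracle_hive_ddl(table, columns, hive_db="default", directory="default_dir"):
    """Build an ORACLE_HIVE external table DDL string.

    columns is a list of (column_name, oracle_type) pairs chosen by the caller.
    The Oracle table is given the same name as the Hive table it points at.
    """
    column_list = ",\n".join(
        f"  {name} {oracle_type}" for name, oracle_type in columns
    )
    return (
        f"CREATE TABLE {table} (\n"
        f"{column_list}\n"
        f")\n"
        f"ORGANIZATION EXTERNAL (\n"
        f"  TYPE oracle_hive\n"
        f"  DEFAULT DIRECTORY {directory}\n"
        f"  ACCESS PARAMETERS (com.oracle.bigdata.tablename={hive_db}.{table})\n"
        f")"
    )


if __name__ == "__main__":
    print(oracle_hive_ddl(
        "access_per_post_categories",
        [("hostname", "varchar2(100)"), ("ip_integer", "number")],
    ))
```

The generated text follows the same shape as the statement above, so it can be reviewed and then run from SQL*Plus or SQL*Developer in the usual way.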

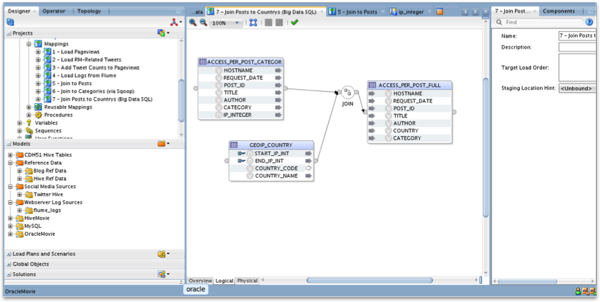

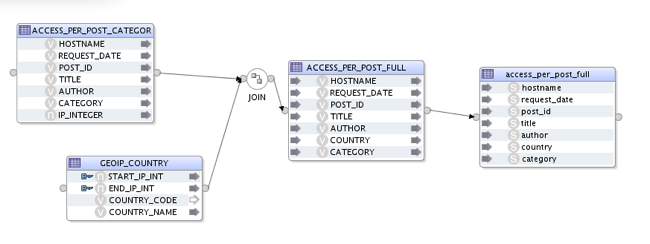

Then it’s just a case of importing the metadata into ODI’s repository for the external table over Hive, the table I’m going to join it to, and the table I’m going to load the results into, and then creating a mapping to bring them all together.

Importantly, I can create the join between the tables using the BETWEEN clause, something I just couldn’t do when working with Hive tables on their own.

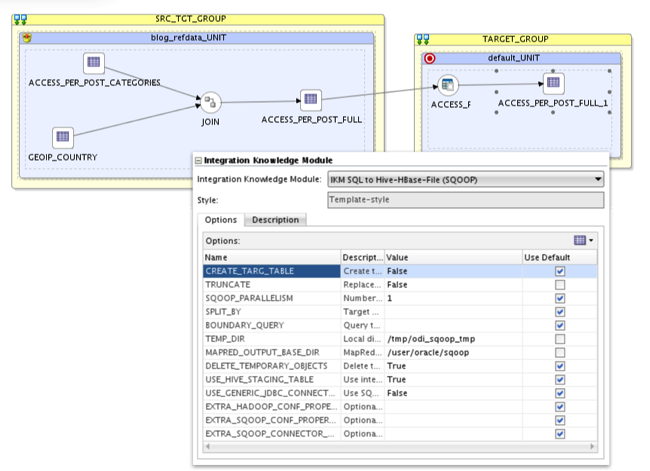

Running the mapping then joins the web server log table to the geocoding IP address range lookup table through the Oracle SQL engine, removing all the complexity of using Hive streaming, Pig or the other workaround solutions I used before. What I can then do is add a further step to the mapping to take the output of my join and use that to load the results back into Hive, like this:

I’ll then use the IKM SQL to Hive-HBase-File (SQOOP) knowledge module to set up the export from Oracle into Hive.

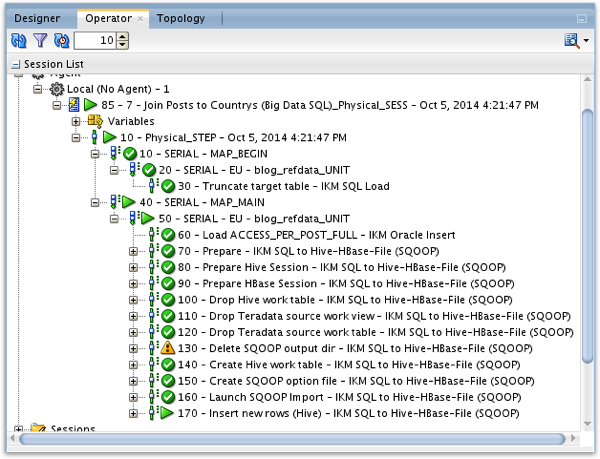

Now, when I run the mapping I can see the initial table join taking place between the Oracle native table and the Hive-sourced external table, and the results then being exported back into Hadoop at the end using the Sqoop KM.

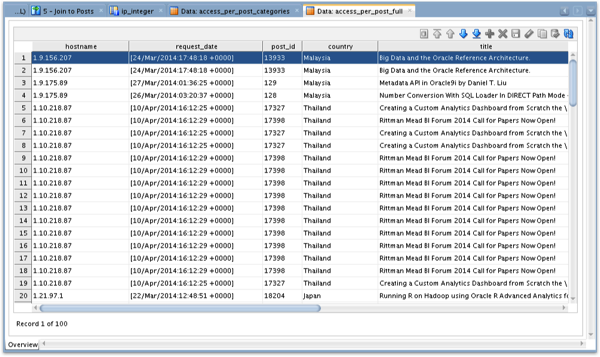

Finally, I can view the contents of the downstream Hive table loaded via Sqoop, and see that it does in fact contain the country name for each of the page accesses.

Oracle Big Data SQL isn’t a solution suitable for everyone; it only runs on the BDA and requires Exadata for the database access, and it’s an additional license cost on top of the base BDA software bundle. But if you’ve got it available it’s an excellent way to blend Hive and Oracle data, and a great way around some of the restrictions around HiveQL and the Hive JDBC/ODBC drivers. More on this topic later next week, when I’ll look at using Big Data SQL in conjunction with OBIEE 11g.