OBIEE and ODI on Hadoop : Next-Generation Initiatives To Improve Hive Performance

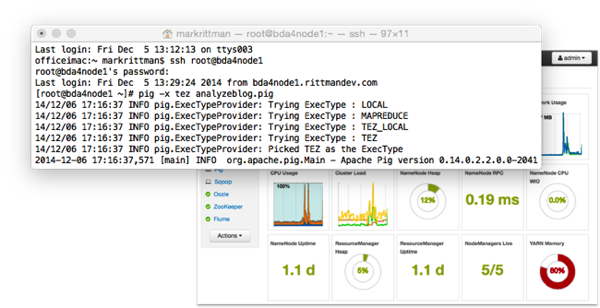

The other week I posted a three-part series (part 1, part 2 and part 3) on going beyond MapReduce for Hadoop-based ETL, where I looked at a typical Apache Pig dataflow-style ETL process and showed how Apache Tez and Apache Spark can potentially make these processes run faster and make better use of in-memory processing. I picked Pig as a data processing environment because the multi-step data transformations it creates translate into lots of separate MapReduce jobs in traditional Hadoop ETL environments, but run as a single DAG (directed acyclic graph) under Tez and Spark and can potentially use memory to pass intermediate results between steps, rather than writing all those intermediate datasets to disk.

But tools such as OBIEE and ODI use Apache Hive to interface with the Hadoop world, not Pig, so it’s improvements to Hive that will have the biggest immediate impact on the tools we use today. And what’s interesting is the development work that’s going on around Hive in this area, with four different “next-generation Hive” initiatives under way that could end up making OBIEE and ODI on Hadoop run faster:

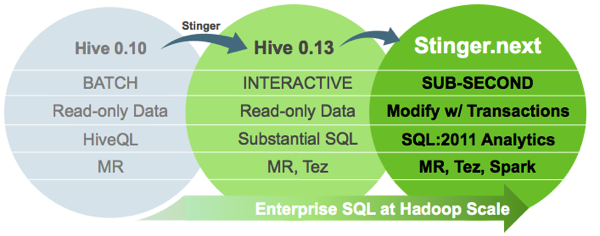

- Hive-on-Tez (or “Stinger”), principally championed by Hortonworks, along with Stinger.next which will enable ACID transactions in HiveQL

- Hive-on-Spark, a more limited port of Hive to run on Spark and backed by Cloudera amongst others

- Spark SQL within Apache Spark, which enables SQL queries against Spark RDDs (and Hive tables), and exposes a HiveServer2-compatible Thrift Server for JDBC access

- Vendor initiatives that build on Hive but are mainly around integration with their RDBMS engines, for example Oracle Big Data SQL

Vendor initiatives like Oracle’s Big Data SQL and Cloudera Impala have the benefit of working now (and are supported), but usually come with some sort of penalty for not working directly within the Hive framework. Oracle’s Big Data SQL, for example, can read data from Hive (very efficiently, using Exadata SmartScan-type technology) but then can’t write back to Hive, and currently pulls all the Hive data into Oracle if you try to join Oracle and Hive data together. Cloudera’s Impala, on the other hand, is lightning-fast and works directly on the Hadoop platform, but doesn’t support the same ecosystem of SerDes and storage handlers that Hive supports, taking away one of the key flexibility benefits of working with Hive.

So what about the attempts to extend and improve Hive, or include Hive interfaces and compatibility in Spark? In most cases an ETL routine written as a series of Hive statements isn’t going to be as fast or resource-efficient as a custom Spark program, but if we can make Hive run faster or have a Spark application masquerade as a Hive database, we could effectively give OBIEE and ODI on Hadoop a “free” platform performance upgrade without having to change the way they access Hadoop data. So what are these initiatives about, and how usable are they now with OBIEE and ODI?

Probably the most ambitious next-generation Hive project is the Stinger initiative. Backed by Hortonworks and based on the Apache Tez framework that runs on Hadoop 2.0 and YARN, Stinger aimed first to port Hive to run on Tez (which runs MapReduce jobs but enables them to potentially run as a single DAG), and then to add ACID transaction capabilities so that you can UPDATE and DELETE from a Hive table as well as INSERT and SELECT, using a transaction model that allows you to roll back uncommitted changes (diagram from the Hortonworks Stinger.next page).

Tez is more a set of developer APIs than the full data discovery / data analysis platform that Spark aims to provide, but it’s a technology that’s available now as part of Hortonworks’ HDP2.2 platform and, as I showed in the blog post a few days ago, an existing Pig script that you run as-is on a Tez environment typically runs twice as fast as when it’s using MapReduce to move data around (with the usual testing caveats applying, YMMV etc). Hive should be the same as well, giving us the ability to take Hive transformation scripts and run them unchanged except for specifying Tez at the start as the execution engine.
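As a rough sketch of what “specifying Tez at the start” could look like, the Scala helper below prepends a `hive.execution.engine` setting to an otherwise unchanged HiveQL script. The `HiveEngine` object, the `withEngine` function and the sample query are hypothetical names used for illustration only, and which engine values are actually accepted depends on the Hive build you’re running.

```scala
// Hypothetical helper: prefix a HiveQL script with an execution-engine setting.
object HiveEngine {
  // Engine names discussed in this post; a given Hive build may accept only some of them.
  val knownEngines: Set[String] = Set("mr", "tez", "spark")

  // Returns the script with a "set hive.execution.engine=..." line added at the top.
  def withEngine(engine: String, script: String): String = {
    require(knownEngines.contains(engine), s"unknown execution engine: $engine")
    s"set hive.execution.engine=$engine;\n$script"
  }

  def main(args: Array[String]): Unit = {
    // The script body is left untouched; only the first line is new.
    println(withEngine("tez", "SELECT COUNT(*) FROM posts;"))
  }
}
```

The point of keeping the setting separate from the script body is that the same transformation script could then be run against MapReduce or Tez for comparison, without editing the HiveQL itself.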

Hive on Tez is probably the first of these initiatives we’ll see working with ODI and OBIEE, as ODI has just been certified for Hortonworks HDP2.1, and the new HDP2.2 release is the one that comes with Tez as an option for Pig and Hive query execution. I’m guessing ODI will need to have its Hive KMs updated to add a new option to select Tez or MapReduce as the underlying Hive execution engine, but otherwise I can see this working “out of the box” once ODI support for HDP2.2 is announced.

Going back to the last of the three blog posts I wrote on going beyond MapReduce, many in the Hadoop industry back Spark as the successor to MapReduce rather than Tez, as it’s a more mature implementation that goes beyond the developer-level APIs that Tez aims to provide to make Pig and Hive scripts run faster. As we’ll see in a moment, Spark comes with its own SQL capabilities and a Hive-compatible JDBC interface, but the other “swap-out-the-execution-engine” initiative to improve Hive is Hive on Spark, a port of Hive that allows Spark to be used as Hive’s execution engine instead of just MapReduce.

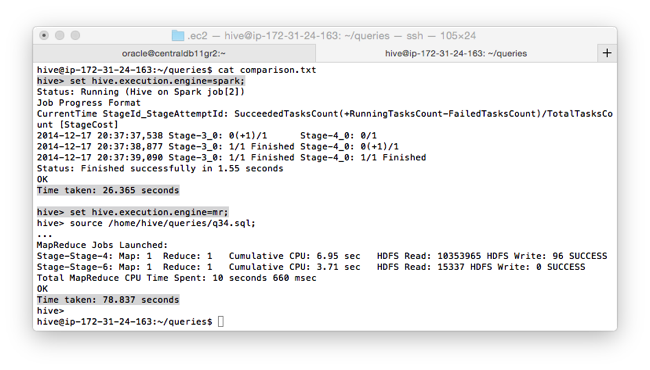

Hive on Spark is at an earlier stage in development than Hive on Tez with the first demo being given at the recent Strata + Hadoop World New York, and specific builds of Spark and versions of Hive needed to get it running. Interestingly though, a post went on the Cloudera Blog a couple of days ago announcing an Amazon AWS AMI machine image that you could use to test Hive on Spark, which though it doesn’t come with a full CDH or HDP installation or features such as a HiveServer JDBC interface, comes with a small TPC-DS dataset and some sample queries that we can use to get a feeling for how it works. I used the AMI image to create an Amazon AWS m3.large instance and gave it a go.

By default, Hive in this demo environment is configured to use Spark as the underlying execution engine. Running a couple of the TPC-DS queries first using this Spark engine, and then switching back to MapReduce by running the command “set hive.execution.engine=mr” within the Hive CLI, I generally found queries using Spark as the execution engine ran 2-3x faster than the MapReduce ones.

You can’t read too much into this timing as the demo AMI is really only to show off the functional features (Hive using Spark as the execution engine) and no work on performance optimisation has been done, but it’s encouraging even at this point that it’s significantly faster than the MapReduce version.

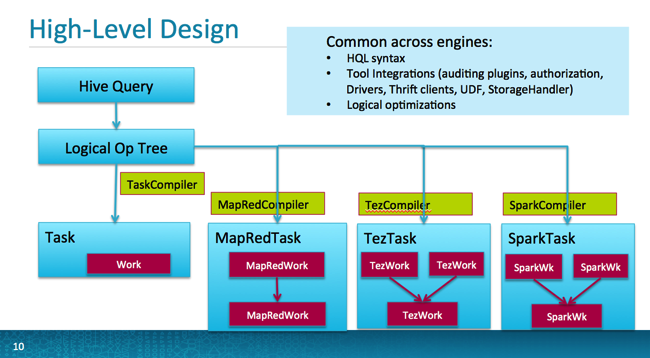

Long-term, the objective is to have both Tez and Spark available as execution engine options under Hive, along with MapReduce, as the diagram below from a presentation by Cloudera’s Szehon Ho shows; the advantage of building on Hive like this rather than creating your own new SQL-on-Hadoop engine is that you can make use of the library of SerDes, storage handlers and so on that you’d otherwise need to recreate for any new tool.

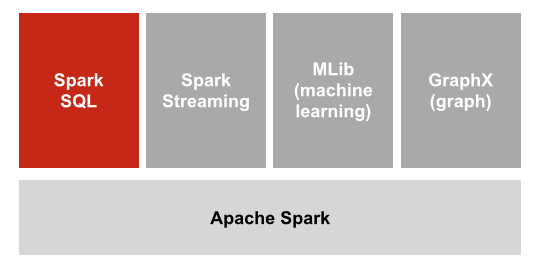

The third major SQL-on-Hadoop initiative I’ve been looking at is Spark SQL within Apache Spark. Unlike Hive on Spark, which aims to swap out the compiler and execution engine parts of Hive but otherwise leave the rest of the product unchanged, Apache Spark as a whole is a much more freeform, flexible data query and analysis environment that’s aimed more at analysts than business users looking to query their dataset using SQL. That said, Spark has some cool SQL and Hive integration features that make it an interesting platform for doing data analysis and ETL.

In my Spark ETL example the other day, I loaded log data and some reference data into RDDs and then filtered and transformed them using a mix of Scala functions and Spark SQL queries. Running on top of the set of core Spark APIs, Spark SQL allows you to register temporary tables within Spark that map onto RDDs, and gives you the option of querying your data using either familiar SQL relational operators, or the more functional programming-style Scala language.
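To illustrate the two query styles, here’s a minimal sketch intended for the spark-shell, assuming the Spark 1.0.x API (where the method is `registerAsTable`; later releases renamed it `registerTempTable`). The `Post` case class, the `posts_tmp` table name, the input path, the column order and the `'post'` filter value are all assumptions made for the example rather than details from my earlier code.

```scala
// Intended for spark-shell on Spark 1.0.x, where `sc` (the SparkContext) is already defined.
val sqlContext = new org.apache.spark.sql.SQLContext(sc)
import sqlContext.createSchemaRDD  // implicit conversion from an RDD of case classes to a SchemaRDD

// Hypothetical record type covering a few of the pipe-delimited fields.
case class Post(postId: Int, title: String, postType: String, author: String)

// Assumed input path and column order; adjust these to match the actual file.
val posts = sc.textFile("/user/root/posts.psv").
  map(_.split("\\|")).
  map(f => Post(f(0).toInt, f(1), f(3), f(4)))

// Register the RDD as a temporary table that Spark SQL can query.
posts.registerAsTable("posts_tmp")

// Relational style: a SQL query against the temporary table.
val viaSql = sqlContext.sql("SELECT title, author FROM posts_tmp WHERE postType = 'post'")

// Functional style: the same filter expressed with Scala functions on the RDD.
val viaScala = posts.filter(_.postType == "post").map(p => (p.title, p.author))

viaSql.collect().foreach(println)
viaScala.collect().foreach(println)
```

Both queries run against the same underlying RDD, so which style you use is largely a matter of which reads more naturally for a given transformation step.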

You can also create connections to the Hive metastore though, and create Hive tables within your Spark application for when you want to persist results to a table rather than work with the temporary tables that Spark SQL usually creates against RDDs. In the code example below, I create a HiveContext as opposed to the sqlContext that I used in the example on the previous blog, and then use that to create a table in my Hive database, running on a Hortonworks HDP2.1 VM with Spark 1.0.0 pre-built for Hadoop 2.4.0:

scala> val hiveContext = new org.apache.spark.sql.hive.HiveContext(sc)

scala> hiveContext.hql("CREATE TABLE posts_hive (post_id int, title string, postdate string, post_type string, author string, post_name string, generated_url string) row format delimited fields terminated by '|' stored as textfile")

scala> hiveContext.hql("LOAD DATA INPATH '/user/root/posts.psv' INTO TABLE posts_hive")

If I then go into the Hive CLI, I can see this new table listed there alongside the other ones:

hive> show tables;
OK
dummy
posts
posts2
posts_hive
sample_07
sample_08
src
testtable2
Time taken: 0.536 seconds, Fetched: 8 row(s)

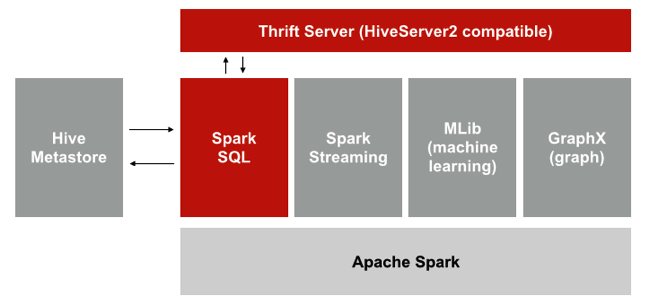

What’s even more interesting is that Spark also comes with a HiveServer2-compatible Thrift Server, making it possible for tools such as ODI that connect to Hive via JDBC to run Hive queries through Spark, with the Hive metastore providing the metadata but Spark running as the execution engine.

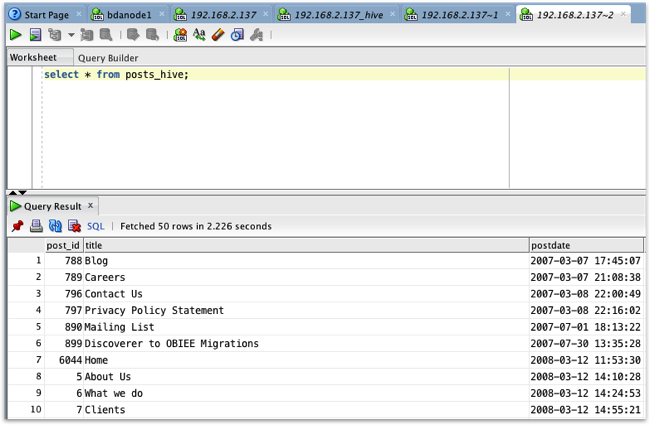

This is subtly different to Hive-on-Spark: Hive’s metastore and its support for SerDes and storage handlers still run under the covers, but Spark provides you with a full programmatic environment, making it possible to just expose Hive tables through the Spark layer, or mix and match data from RDDs, Hive tables and other sources before storing and then exposing the results through the Hive SQL interface. For example, you could use Oracle SQL*Developer 4.1 with the Cloudera Hive JDBC drivers to connect to this Spark SQL Thrift Server and query the tables just like any other Hive source, but crucially the query execution is being done by Spark, rather than by MapReduce as would normally happen.
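From a client’s point of view, a connection to the Thrift Server looks like any other HiveServer2 JDBC connection. The sketch below shows roughly what that might look like from Scala using plain `java.sql`; the host, port, database, user name and the `ThriftServerQuery` object are assumptions for illustration, the driver class shown is the one from the Apache Hive JDBC driver (vendor drivers use different class names), and the driver jar needs to be on the classpath.

```scala
import java.sql.{Connection, DriverManager, ResultSet}

object ThriftServerQuery {
  // Builds a HiveServer2-style JDBC URL; host, port and database are assumptions.
  def jdbcUrl(host: String, port: Int, database: String): String =
    s"jdbc:hive2://$host:$port/$database"

  def main(args: Array[String]): Unit = {
    // Driver class from the Apache Hive JDBC driver; vendor drivers use other class names.
    Class.forName("org.apache.hive.jdbc.HiveDriver")
    val conn: Connection =
      DriverManager.getConnection(jdbcUrl("localhost", 10000, "default"), "root", "")
    try {
      val stmt = conn.createStatement()
      // posts_hive is the table created through the HiveContext earlier in this post.
      val rs: ResultSet = stmt.executeQuery("SELECT post_id, title FROM posts_hive LIMIT 10")
      while (rs.next()) {
        println(s"${rs.getInt(1)}\t${rs.getString(2)}")
      }
    } finally {
      conn.close()
    }
  }
}
```

Nothing in the client code refers to Spark at all, which is what makes this route attractive for tools such as ODI that already talk to Hive over JDBC.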

Like Hive-on-Spark, Spark SQL and Hive support within Spark SQL are at an early stage, with Spark SQL not yet being supported by Cloudera whereas the core Spark API is. From the work I’ve done with it, it’s not yet possible to expose Spark SQL temporary tables through the HiveServer2 Thrift Server interface, and I can’t see a way of creating Hive tables out of RDDs unless you stage the RDD data to a file in-between. But it’s clearly a promising technology, and if it becomes possible to seamlessly combine RDD data and Hive data, and to expose Spark RDDs registered as tables through the HiveServer2 JDBC interface, it could make Spark a very compelling platform for BI and data analyst-type applications. Oracle’s David Allen, for example, blogged about using Spark and the Spark SQL Thrift Server interface to connect ODI to Hive through Spark, and I’d imagine it’d be possible to use the Cloudera HiveServer2 ODBC drivers along with the Windows version of OBIEE 11.1.1.7 to connect to Spark in this way too - if I get some spare time over the Christmas break I’ll try to get an example working.