OAC: Essbase – Incremental Loads / Automation

I recently detailed data load possibilities with the tools provided with Essbase under OAC here. Whilst all very usable, my thoughts turned to systems that I have worked on and how the loads currently work, which led to how you might perform incremental and / or automated loads for OAC Essbase.

A few background points:

- The OAC front end and EssCS command line tools contain a ‘clear’ option for data, but both are full data clears – there does not seem to be a partial or specifiable ‘clear’ available.

- The OAC front end and EssCS command line tools contain a ‘file upload’ function for (amongst other things) data, rules, and MAXL (msh) script files. Whilst the front-end operation has the ability to overwrite existing files, the EssCS Upload facility (which would be used when trying to script a load) seemingly does not – if an attempt is made to upload a file that already exists, an error is shown.

- The OAC ‘Job’ facility enables a data load to be conducted with a rules file; the EssCS Dataload function (which would be used when trying to script a load) seemingly does not.

- MAXL still exists in OAC, so it is possible to operate at Essbase ‘command level’

Whilst the tools that are in place all work well and are fine for migration or other manual / ad hoc activity, I am not sure what the intended practice might be around some ‘real world’ use cases. A few that spring to mind are

- Incremental loads

- Scheduled loads

- Large ASO loads (using buffers)

Incremental Loads

It is arguably possible to perform an incremental load in that

- A rules file can be crafted in on-prem and uploaded to OAC (along with a partial datafile)

- Loads appear to be conducted in overwrite mode, meaning changed and new records will be handled ok

It is possible that (eg) a ‘current month’ data file could be loaded and reloaded to form an incremental load of sorts. The problem here will come if data is deleted for a particular member combination in the source from one day to the next – with no partial clear (eg, of current month data) seemingly possible, there is no way of clearing redundant values (at least for an ASO cube…for a BSO load, the ‘Clear combinations’ functionality of the load rules file can be used…although that has not yet been tested on this version).

So in the case of an ASO cube, the only option using available tools would be to ensure that ‘contra’ records are added to the incremental load file. This is not ideal, as it is another process to follow in data preparation, and would also add unnecessary zeros to the cube. For these reasons, I would generally look to effect a partial clear of the ‘slice’ being loaded before proceeding with an incremental load.

The only way I can see of achieving this under OAC would be to take advantage of the fact that MAXL is available and effect the clear using alter database clear data.
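As a rough illustration of what that clear might look like, the sketch below writes a small MaxL script from the shell. The function name, the cube name and the MDX region are placeholders of mine rather than anything OAC provides, and the login line assumes MaxL positional parameters ($1, $2, $3) are passed in when the script is run.

```shell
#!/bin/sh
# Sketch: write a MaxL script that clears one slice of an ASO cube.
# Arguments (hypothetical examples): output file, app.db name, MDX set.
write_clear_script() {
    out=$1
    cube=$2
    region=$3
    {
        # $1, $2 and $3 below are MaxL positional parameters (user, password,
        # host) supplied when the script is run, so nothing is hard-coded here.
        printf '%s\n' 'login $1 identified by $2 on $3;'
        # "physical" removes the cells rather than writing offsetting values.
        printf "alter database %s clear data in region '%s' physical;\n" "$cube" "$region"
        printf '%s\n' 'logout;' 'exit;'
    } > "$out"
}
```

Calling `write_clear_script clear_month.msh ASOSamp.Basic '{[Jan]}'` would produce a four-line script; the region would need to be whatever MDX set describes the slice being reloaded.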

This means that the steps required might be

- Upload prepared incremental data file (either manually via OAC or via EssCS UploadFiles after having first deleted the existing file)

- Upload on-prem prepared rules file (either manually via OAC or via EssCS UploadFiles after having first deleted the existing file)

- Access the OAC server (eg via PuTTY), start MAXL, and run a command to clear the required slice / merge slices (if necessary)

- In OAC, create / run a job for the specified data file / rules file

I may have missed something, but I see no obvious way of being able to automate this process with the on-board facilities.

Automating the load process

Along with the points listed above, some other facts to be aware of:

- It is possible to manually transfer files to OAC using FTP

- It is possible to amend the cron scheduler for the oracle user in OAC

Even bearing in mind the above, I should caveat this section by saying getting ‘under the hood’ in this way is possibly not supported or recommended, and should only be undertaken at your own risk.

Having said that…

By taking advantage of the availability of FTP and cron, it should be possible to script a solution that can run unattended, for full and incremental loads. Furthermore, data clears (full or partial) can be included in the same process, as could parallel buffer loading for ASO or any other MAXL-controllable process (within the confines of this version of Essbase).

The OAC environment

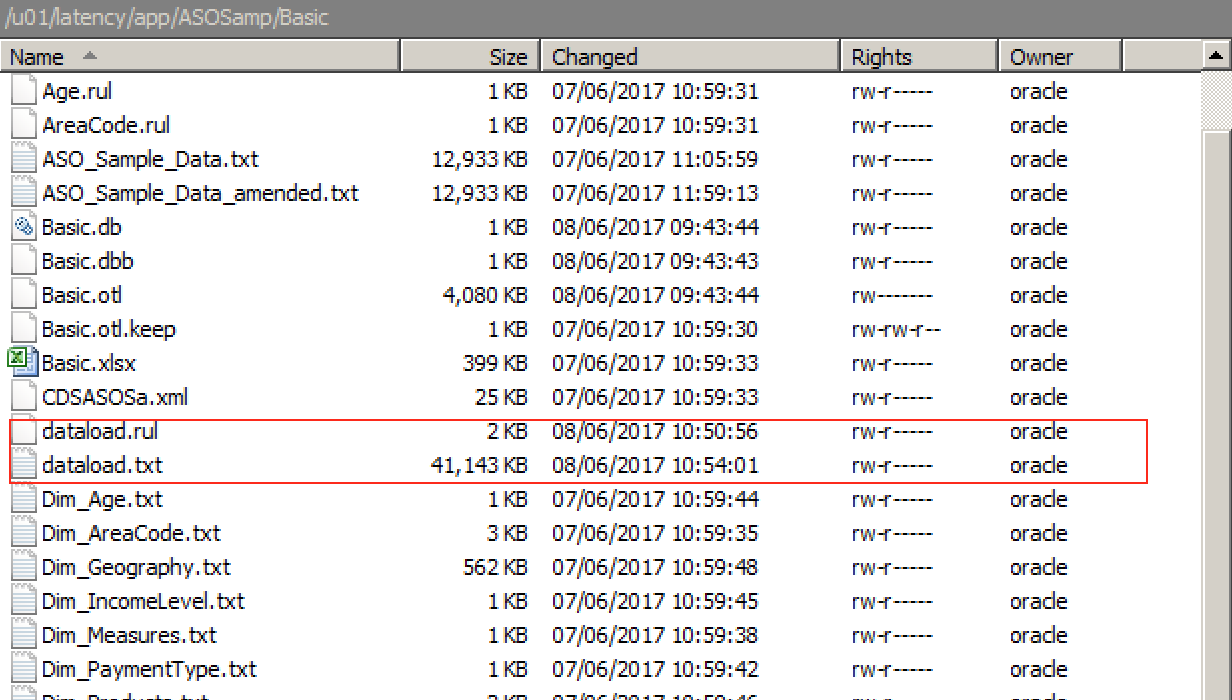

A quick look around discloses that the /u01/latency directory is roughly the equivalent of the ../user_projects/epmsystem1/EssbaseServer/essbaseserver1 (or equivalent) directory in an on-prem release in that it contains the /app ‘parent’ directory which in turn contains a subdirectory structure for all application and cube artefacts. Examining this directory for ASOSamp.Basic shows that the uploaded dataload.* files are here, along with all other files listed by the Files screen of OAC:

Note that remote connection is via the opc user, but this can be changed to oracle once connected (by using sudo su - oracle).

As oracle, these files can be manually deleted…doing so means they will no longer be found by the EssCS Listfiles command or the Files screen within OAC (once refreshed). If deleted manually, new versions of the files can be re-uploaded via either of the methods detailed above (whilst an overwrite option exists in the OAC Files facility, there seems to be no such option with the EssCS Upload feature…trying to upload a file that already exists results in an error).

All files are owned by the oracle user, with no access rights at all for the opc user that effects a remote connection via FTP.

Automation: Objectives

The objective of this exercise was to come up with a method that, unattended, would:

- Upload received files (data, rules) to OAC from a local source

- Put them in the correct OAC directory in a usable format

- Invoke a process that runs a pre-load process (eg a clear), a load, and (if necessary) a post-load process

- Clear up after itself

Automation: The Process

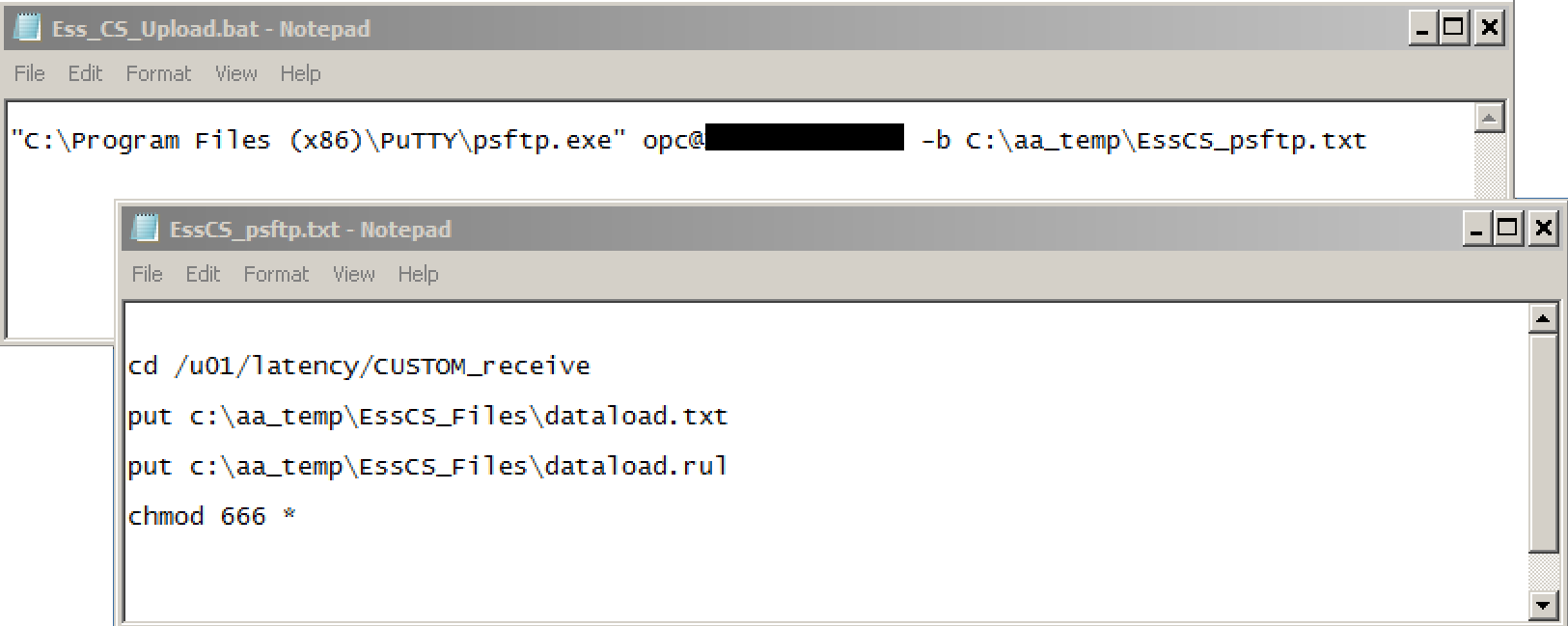

The first job is to handle the upload of files to OAC. This could be achieved via a psftp script that uploads the entire contents of a nominated local directory:

The Ess_CS_Upload.bat script above (which can, of course, be added to a local scheduler so that it runs unattended at appointed times) passes a pre-scripted file to psftp to connect and transfer the files. Note that the opc user is used for the connection, and the files are posted to a custom-created directory, CUSTOM_receive (under the existing /u01/latency). The transferred files are also given a global ‘rw’ attribute to assist with later processing.
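For anyone driving the transfer from a Unix-like machine rather than a Windows batch file, a minimal sketch of building the psftp command file from the contents of a directory might look like this. The function name and the output file are my own inventions; `cd`, `put`, `chmod` and `quit` are standard psftp (and OpenSSH sftp) batch commands, and 666 is the octal form of the global ‘rw’ attribute.

```shell
#!/bin/sh
# Sketch: build the transfer batch file from whatever is in a local directory.
# $1 = local directory, $2 = remote receiving directory, $3 = batch file to write
build_transfer_script() {
    local_dir=$1
    remote_dir=$2
    out=$3
    printf 'cd "%s"\n' "$remote_dir" > "$out"
    for f in "$local_dir"/*; do
        [ -f "$f" ] || continue          # skip subdirectories and an empty match
        name=$(basename "$f")
        printf 'put "%s" "%s"\n' "$f" "$name" >> "$out"
        printf 'chmod 666 "%s"\n' "$name" >> "$out"   # global rw for later processing
    done
    printf 'quit\n' >> "$out"
}
```

The resulting file could then be handed to psftp with its `-b` option, connecting as opc. Building the list at run time avoids having to maintain file names by hand.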

Now the files are in the OAC environment, control is taken up there.

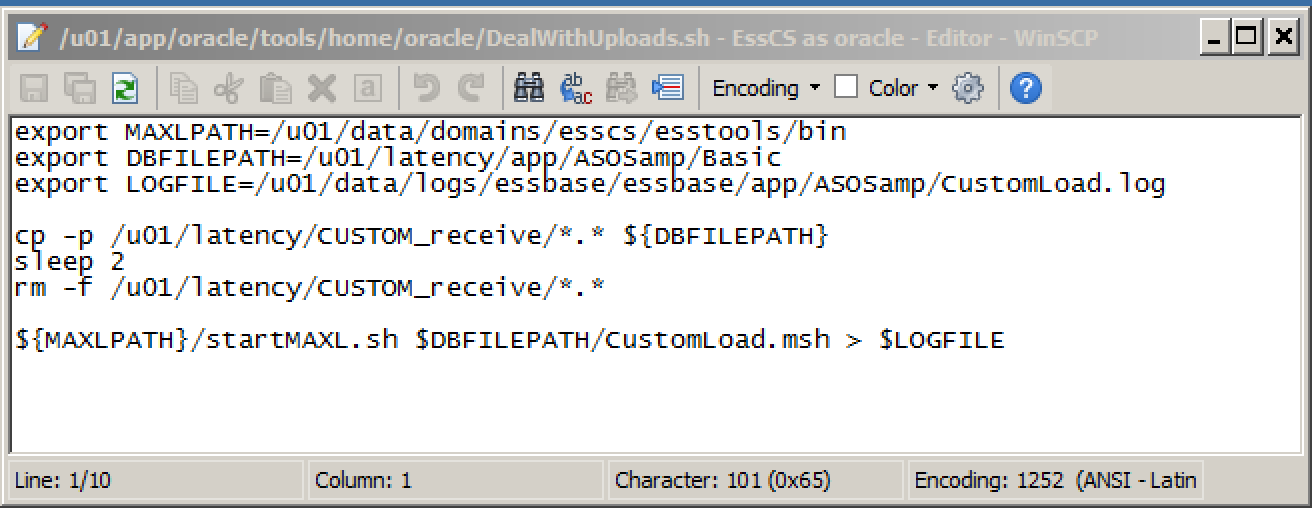

A shell script (DealWithUploads) is added to the oracle home directory:

This copies all the files in the nominated receiving directory to the actual required location – in this case, the main ASOSamp/Basic directory. Note the use of ‘-p’ with the copy command to ensure that attributes (ie, the global ‘rw’) are retained. Once copied, the files are deleted from the receiving directory so that they are not processed again.
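The copy-then-delete step can be sketched along the following lines. This is not the DealWithUploads script itself, just an illustration of the idea with a hypothetical function name; the one refinement is that a file is only removed from the receiving directory if its copy succeeded.

```shell
#!/bin/sh
# Sketch of the copy-then-delete step.
# $1 = receiving directory, $2 = cube directory
move_uploads() {
    recv_dir=$1
    target_dir=$2
    for f in "$recv_dir"/*; do
        [ -f "$f" ] || continue
        # -p keeps mode and timestamps; remove the source only if the copy worked
        if cp -p "$f" "$target_dir"/; then
            rm -f "$f"
        else
            echo "copy failed, leaving in place: $f" >&2
        fi
    done
}
```

Here that would be called with the CUSTOM_receive directory as the first argument and the ASOSamp/Basic directory as the second.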

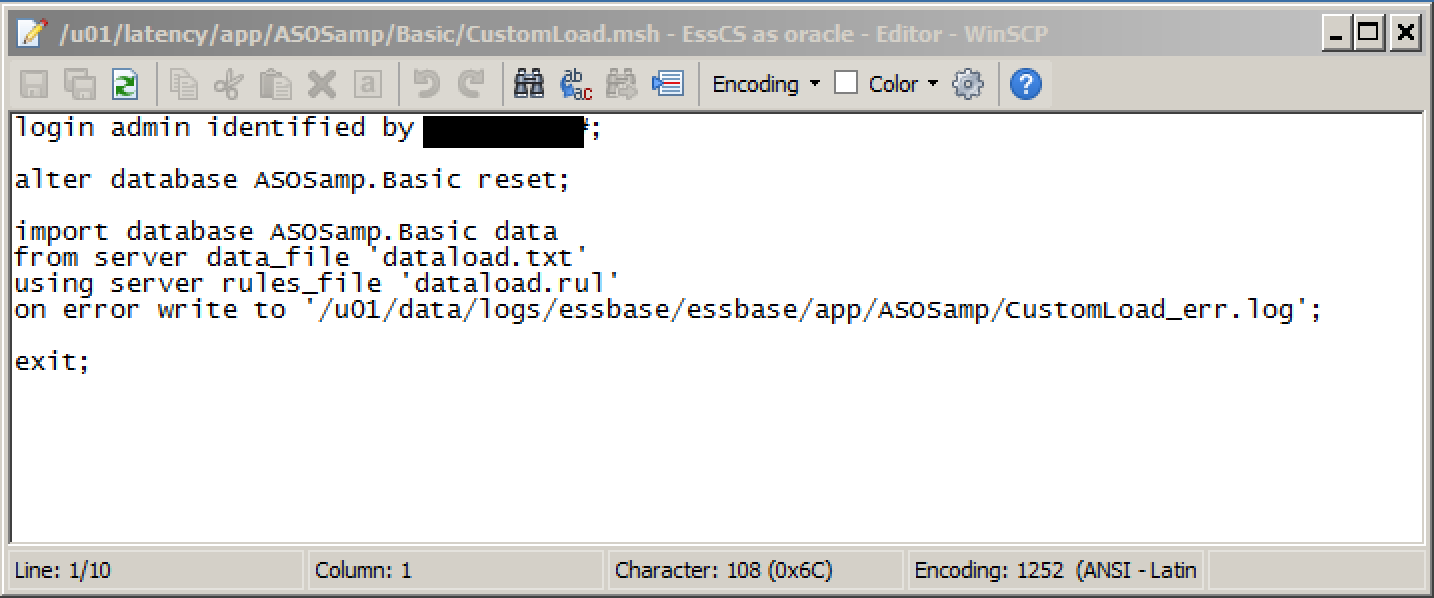

Once the files are copied into place, startMAXL is used to invoke a pre-prepared msh script:

As can be seen, this clears the cube and re-imports from the uploaded file using the uploaded rules file. The clear here is a full reset, but a partial clear (in the case of ASO) can be used instead if required.
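A hedged sketch of how the clear-and-load script might be generated and then run only when a data file has actually arrived is shown below. The function names and the MAXL_CMD variable are hypothetical, the launcher is assumed to be startMAXL.sh on the PATH, and the clear statement is passed in as text so that either a full or a partial clear can be used.

```shell
#!/bin/sh
# Sketch: write a clear-and-load MaxL script, then run it only if data arrived.
# MAXL_CMD is the launcher; startMAXL.sh on the PATH is an assumption.
MAXL_CMD=${MAXL_CMD:-startMAXL.sh}

# $1 = output file, $2 = app.db, $3 = data file, $4 = rules file,
# $5 = pre-load MaxL statement (eg a full or partial clear)
write_load_script() {
    {
        printf '%s\n' 'login $1 identified by $2 on $3;'   # MaxL positional parameters
        printf '%s\n' "$5"
        printf "import database %s data from server data_file '%s' " "$2" "$3"
        printf "using server rules_file '%s' on error abort;\n" "$4"
        printf '%s\n' 'logout;' 'exit;'
    } > "$1"
}

# $1 = cube directory, $2 = data file name, $3 = MaxL script to run
run_load_if_needed() {
    if [ -s "$1/$2" ]; then
        "$MAXL_CMD" "$3"
    else
        echo "no data file $2 in $1, skipping load" >&2
    fi
}
```

In practice the login details would also have to reach the MaxL script, for example as extra arguments after the script name, and ideally not in plain text.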

As with the ‘local’ half of the method, the DealWithUploads.sh script file can be added to the scheduler on OAC: the existing cron entries are already held in the file /u01/app/oracle/tools/home/oracle/crontab.txt; it is a simple exercise to schedule a call to this new custom script.
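Adding the schedule line can itself be scripted. The sketch below appends an entry to a crontab file only if it is not already present, so it is safe to re-run; the function name is hypothetical, as are the script location and the run time in the usage example.

```shell
#!/bin/sh
# Sketch: add a schedule line to a crontab file only if it is not already there.
# $1 = crontab file, $2 = full cron line
add_cron_entry() {
    cron_file=$1
    entry=$2
    # -x matches whole lines, -F treats the entry as literal text (it contains *)
    if grep -qxF -- "$entry" "$cron_file" 2>/dev/null; then
        return 0                      # already scheduled, nothing to do
    fi
    printf '%s\n' "$entry" >> "$cron_file"
}
```

For example, `add_cron_entry crontab.txt '30 2 * * * /home/oracle/DealWithUploads.sh'` would schedule a 02:30 daily run, assuming the script lives in /home/oracle and that the file is subsequently loaded into cron.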

A routine such as this would need a good degree of refinement and hardening – the file lists for the transfers should be self-building, passwords need to be encrypted, the MAXL script should only be called if required, the posting locations for files should be content/context sensitive, etc – but in terms of feasibility testing the requirements listed above, it was successful.

This approach places additional directories and files in an environment / structure that could be maintained at any time: it is therefore imperative that some form of code control / release mechanism is employed so that it can be replaced in the event of any unexpected / uncontrollable maintenance taking place on the OAC environment that could invalidate or remove it.

Even once hardened, I think there is a considerable weak spot in this approach in that the rules file seemingly has to be crafted in an on-prem environment and uploaded: as I detailed here, even freshly-uploaded, working rules files error when an attempt is made to verify them. For now, I’ll keep looking for an alternative.

Summary

Whilst a lot of the high-level functionality is in place around data loads, often with multiple methods, I think there are a couple of detailed functionality areas that may currently require workarounds. To my mind, the ability to select & run an msh format ‘preload’ script when running a dataload Job (eg for clears) would be a useful addition, whilst a fully functional rules file editor strikes me as important. The fact that an FTP connection is available at all is a bonus, but because this is as a non-oracle user, it is not possible to put a file in the correct place directly. The EssCS Upload facility does this of course, but the seeming absence of an overwrite option (or an additional Delete option for EssCS) somewhat limits its usefulness at this point. But can you implement an unattended, scheduled load or incremental load routine? Sure you can.