Real-time Sailing Yacht Performance - Getting Started (Part 1)

In this series of articles, I intend to look at collecting and analysing our yacht’s data. I aim to show how a number of technologies can be used to achieve this, along with the thought processes behind the build and the exploration of the data. Ultimately, I want to improve our sailing performance with data. This is not a new concept for professional teams but, unlike Oracle it seems, I have a limited amount of hardware and funds, so it's time for a bit of DIY!

In this article, I introduce some concepts and terms, then start cleaning and exploring the data.

Background

I have owned a Sigma 400 sailing yacht for over twelve years and she is used primarily for cruising but every now and then we do a bit of offshore racing.

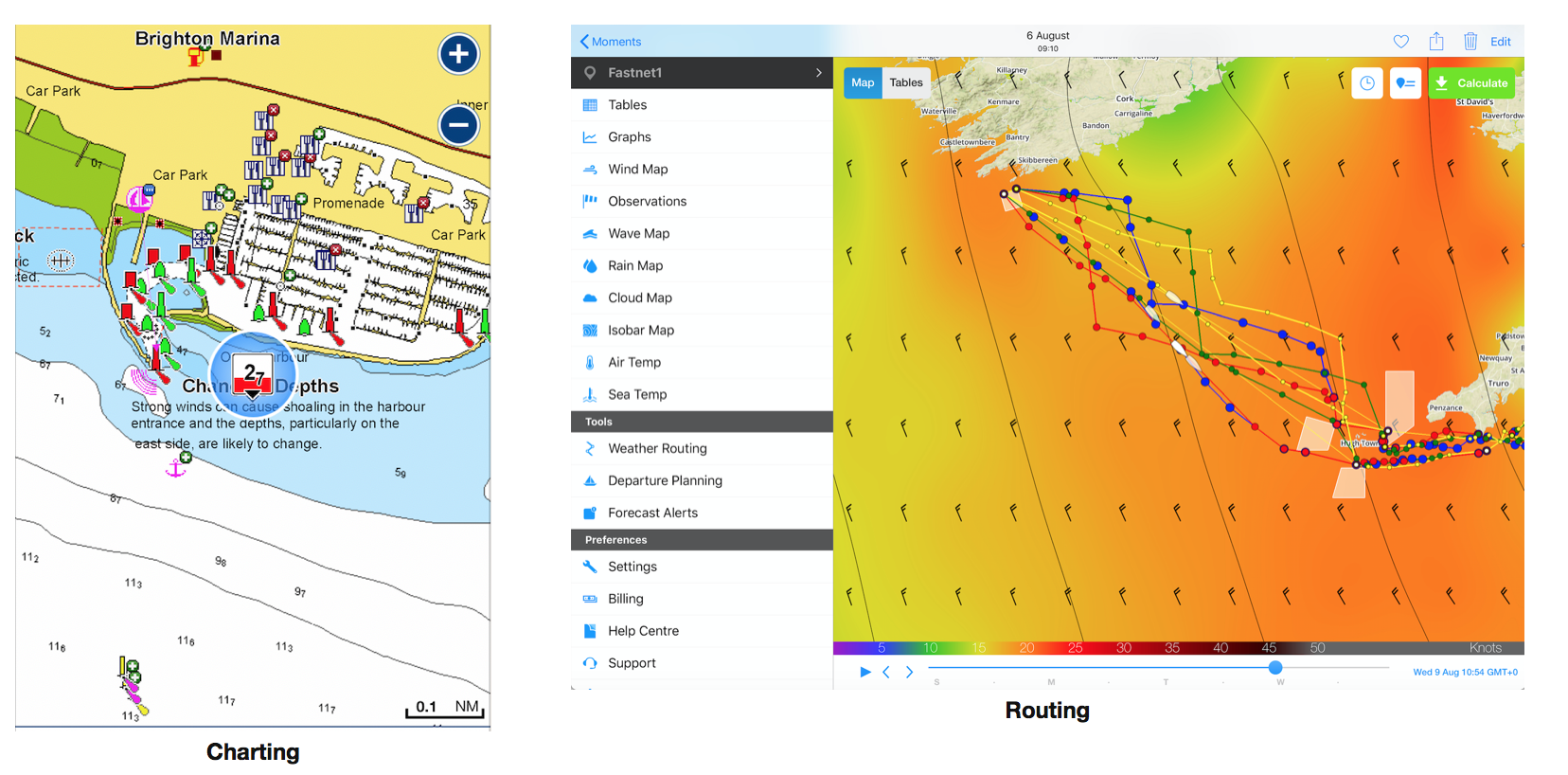

In the last few years we have moved from paper charts and a very manual way of life to electronic charts and iOS apps for navigation.

In 2017 we started to use weather modelling software to predict the optimal route for a passage, taking wind, tide and estimated boat performance (polars) into consideration.

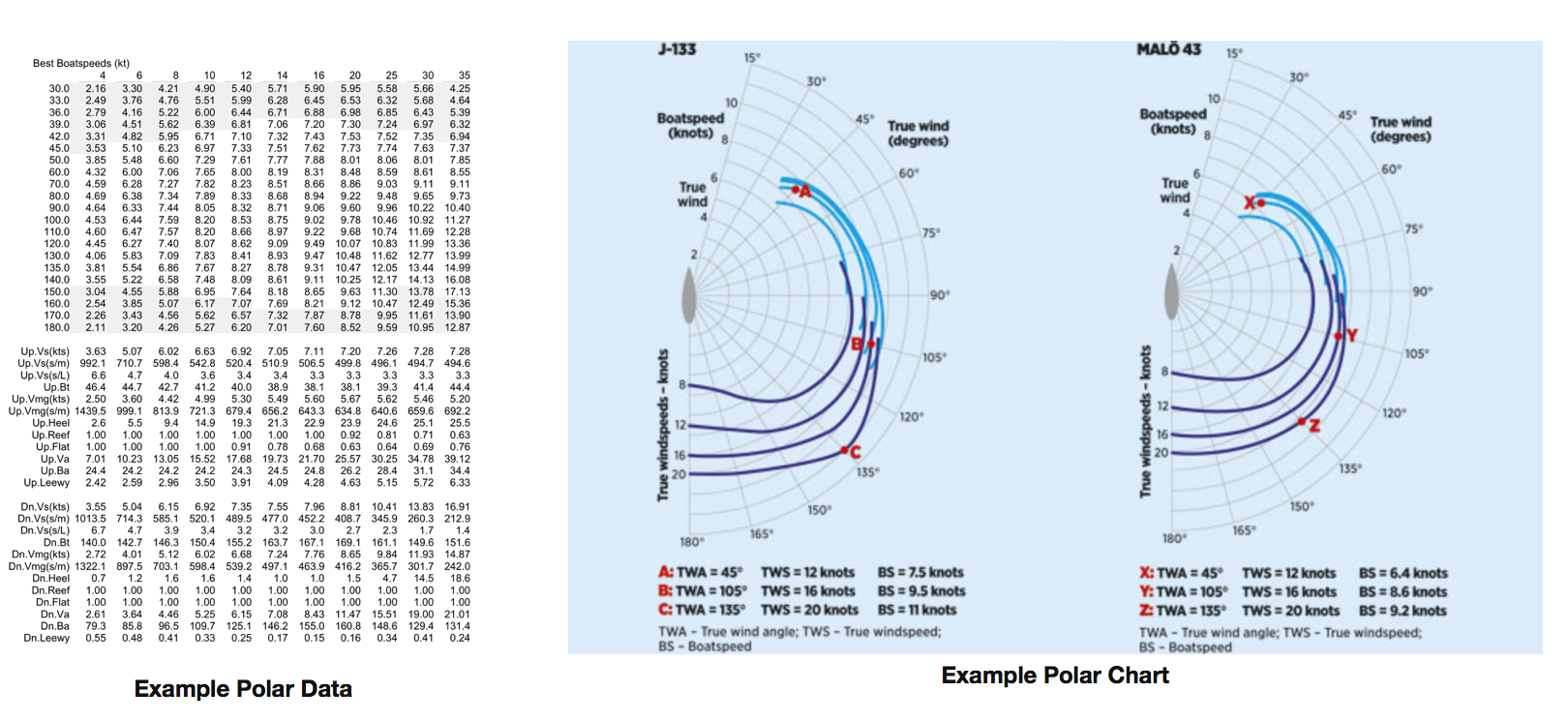

The predicted routes are driven in part by a boat's polars. The original "polars" are a set of theoretical calculations created by the boat’s designer, defining what the boat should do at each wind speed and angle of sailing. Polars give us a plot of the boat's speed for a given true wind speed and angle. This in turn tells us the best speed the boat could achieve at any particular wind speed and angle to the wind. It does not take into consideration helming accuracy, sea state, condition of sails or sail trim, although it may be possible for me to derive different polars for different weather conditions. Fundamentally, polars also give us an indication of the optimal angle to the wind for getting to our destination (velocity made good).
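
To make velocity made good concrete: for a destination dead upwind, it is the component of boat speed that points into the wind, that is, boat speed multiplied by the cosine of the true wind angle. The sketch below picks the best upwind angle from one slice of a polar table. The function names and the polar numbers are invented for illustration; they are not Sigma 400 data.

```python
import math

def vmg(boat_speed_knots, true_wind_angle_deg):
    """Component of boat speed pointing directly upwind (negative means downwind)."""
    return boat_speed_knots * math.cos(math.radians(true_wind_angle_deg))

# Hypothetical slice of a polar table for a single true wind speed:
# true wind angle (degrees) -> boat speed (knots). Invented numbers.
example_polar = {35: 4.8, 40: 5.6, 45: 6.1, 50: 6.4, 55: 6.6}

def best_upwind_angle(polar):
    """Return the (angle, vmg) pair with the highest upwind VMG."""
    angle = max(polar, key=lambda a: vmg(polar[a], a))
    return angle, vmg(polar[angle], angle)

best_angle, best_vmg = best_upwind_angle(example_polar)
print(f"Best upwind angle {best_angle} degrees, VMG {best_vmg:.2f} knots")
```

Sailing closer to the wind shortens the distance but slows the boat, while bearing away does the opposite; the polar is what lets the routing software find the balance point.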

The polars we use at the moment are based on a similar boat to the Sigma 400 but are really a best guess. I want our polars to be more accurate. I would also like to start tracking the boat's performance in real time and post-passage for further analysis.

The purpose of this blog is to use our boat's instrument data to create accurate polars for a number of conditions and get a better understanding of our boat's performance at each point of sail. I would also like to see what can be achieved with the AIS data. I intend to use Python to check and decode the data. I will look at a number of tools to store, buffer, visualise and analyse the outputs.

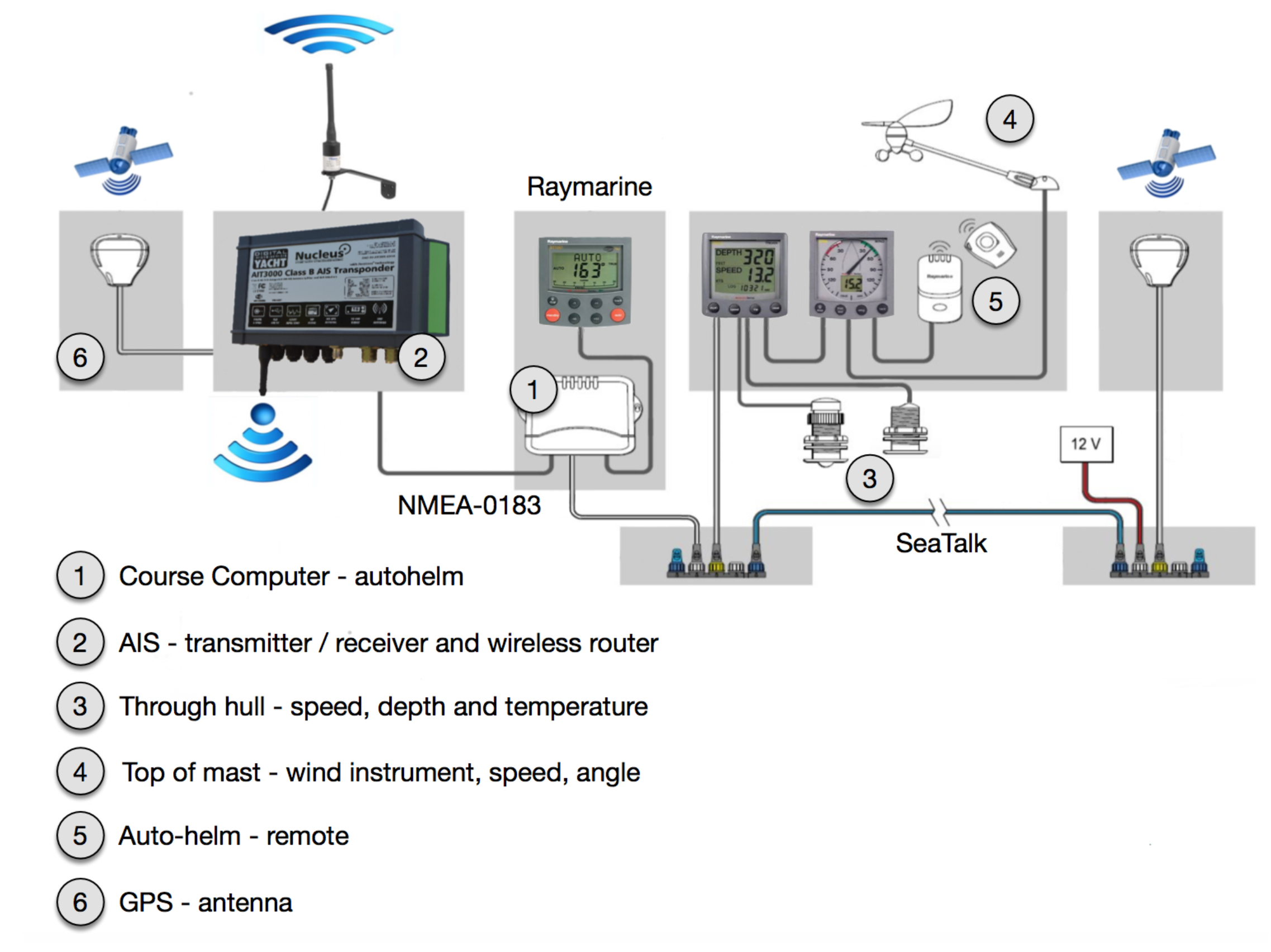

So let’s look at the technology on-board.

Instrumentation Architecture

The instruments are by Raymarine. We have a wind vane, GPS, speed sensor, depth sounder and sea temperature gauge, electronic compass, gyroscope, and rudder angle reader. These are all fed into a central course computer. Some of the instrument displays share and enrich the data, calculating such things as apparent wind angles. All the data travels through a proprietary Raymarine messaging system called SeaTalk. To allow Raymarine instruments to interact with other instrumentation there is an NMEA-0183 port. NMEA-0183 is a well-known and fairly well-documented communication protocol, so this is the data I need to extract from the system. I currently have an NMEA-0183 cable connecting the Raymarine instruments to an AIS transponder. The AIS transponder includes a wireless router, which enables me to connect portable devices to the instrumentation.

The first task is to start looking at the data and get an understanding of what needs to be done before I can start analysing.

Analysing the data

There is a USB connection from the AIS hub; however, the instructions warn that this should only be used during installation. I did spool some data from the USB port and it seemed to work OK. I could connect directly to the NMEA-0183 output, but that would require me to do some wiring, so I will look at that only if the reliability of the wireless causes issues. The second option was to use the wireless connection. I start by spooling the data to a log file using nc (nc is basically OS X's version of netcat, a TCP and UDP tool).

Spooling the data to a log file

nc -p 1234 192.168.1.1 2000 > instrument.log

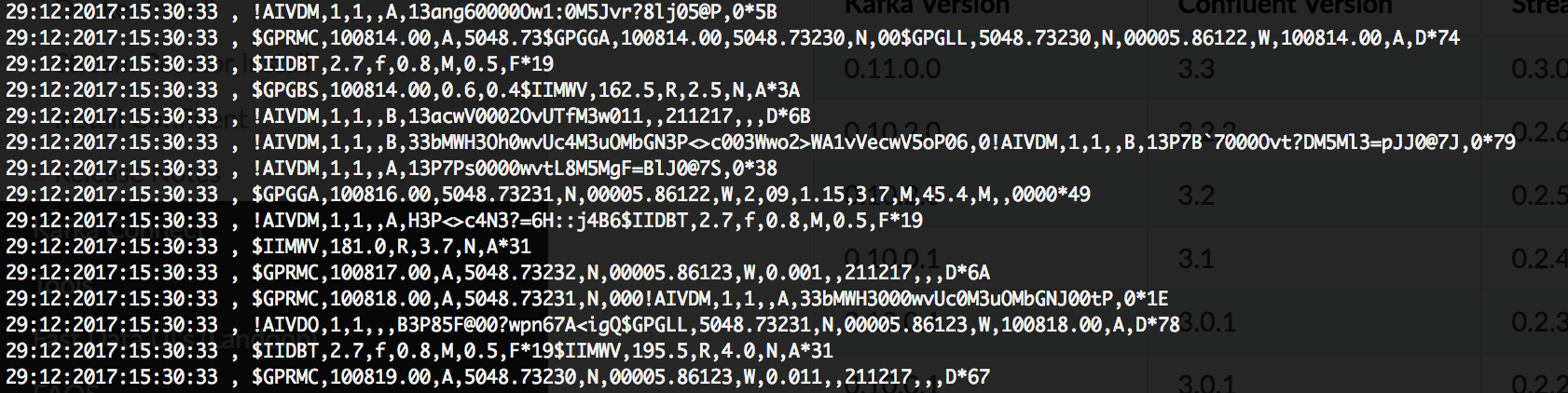

The spooled data gave me a clear indication that there would need to be some sanity checking of the data before it would be useful. The data is split into a number of different message types each consisting of a different structure. I will convert these messages into a JSON format so that the messages are more readable downstream. In the example below the timestamps displayed are attached using awk but my Python script will handle any enrichment as I build out.

The data is comma separated, which makes things easy, and there are a number of good websites that describe the contents of the messages. Looking at the data using a series of awk commands, I can clearly identify three main types of message: GPS, AIS and integrated instrument messages. Each message ends in a two-digit hex checksum. XORing together every character between the leading $ and the * should reproduce this value, which lets me validate the message.
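
As a quick worked example of that XOR check, here is a minimal sketch that computes the checksum for one of the wind messages examined later in this article. The function name `nmea_checksum` is my own for illustration, and I am assuming AIS sentences (which start with ! rather than $) are checksummed the same way.

```python
from functools import reduce

def nmea_checksum(sentence):
    """XOR of every character between the leading $ (or !) and the *."""
    # Drop the first character, then keep everything up to the first *
    payload = sentence.strip()[1:].split("*", 1)[0]
    return reduce(lambda acc, ch: acc ^ ord(ch), payload, 0)

example = "$IIMWV,180.0,R,3.7,N,A*30"
computed = format(nmea_checksum(example), "02X")
received = example.strip().split("*", 1)[1]
print(computed, received, computed == received)  # prints: 30 30 True
```

Formatting with `02X` zero-pads the result, so a checksum below 0x10 still compares correctly against the two characters in the message.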

Looking at an example wind message

We get two messages related to the wind, true and apparent. The data is the same because the boat was stationary.

$IIMWV,180.0,R,3.7,N,A*30

$IIMWV,180.0,T,3.8,N,A*30

These are Integrated Instrument Mast Wind Vane (IIMWV) messages. *I have made an assumption about the meaning of M so if you are an expert in these messages feel free to correct me ;-)*

These messages break down to:

- $IIMWV II Talker, MWV Sentence

- 180.0 Wind Angle 0 - 359

- R Relative (T = True)

- 3.7 Wind Speed

- N Wind Speed Units Knots (K = km/h, M = metres per second)

- A Status (A= Valid)

- *30 Checksum

And in English (ish)

Relative wind angle 180.0 degrees, wind speed 3.7 knots.

Example corrupted message

$GPRMC,100851.00,A,5048.73249,N,00005.86148,W,0.01**$GPGGA**,100851.00,5048.73249,N,00005.8614$GPGLL,5048.73249,N,00005.86148,W,100851.0

Looks like the message failed to get a new line. I notice a number of other types of incomplete or corrupted messages so checking them will be an essential part of the build.
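
One way to spot this kind of run-on line is to count how many sentence starts it contains. The sketch below is only an illustration under a few assumptions: that every sentence begins with $ or ! followed by a five-letter identifier and a comma, and that the bold markers around $GPGGA above are my highlighting rather than part of the data. The names `SENTENCE_START` and `split_run_on` are invented for this example.

```python
import re

# Assumed shape of a sentence start: $ (instruments/GPS) or ! (AIS),
# then a five-letter talker + sentence identifier, then a comma.
SENTENCE_START = re.compile(r"[$!][A-Z]{5},")

def split_run_on(line):
    """Split a raw line into one fragment per sentence start found in it."""
    starts = [m.start() for m in SENTENCE_START.finditer(line)]
    if not starts:
        return []
    ends = starts[1:] + [len(line)]
    return [line[s:e].strip() for s, e in zip(starts, ends)]

corrupted = ("$GPRMC,100851.00,A,5048.73249,N,00005.86148,W,0.01"
             "$GPGGA,100851.00,5048.73249,N,00005.8614"
             "$GPGLL,5048.73249,N,00005.86148,W,100851.0")

fragments = split_run_on(corrupted)
print(len(fragments), "sentence starts found")
for fragment in fragments:
    print(fragment)
```

A line that yields more than one fragment is a run-on. None of the fragments here carries a checksum, so they would all still be rejected, but counting them is a cheap way to log how often the problem occurs.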

Creating a message reader

I don't really want to sit on the boat building code. I need to be doing this while travelling and at home when I get time. So, spooling half an hour of data to a log file gets me started. I can use Python to read from the file and, once that is up and running, spool the log file to a local TCP/IP port and read it using the Python socket library.

Firstly, I read the log file and loop through the messages. For each message, I check whether it is valid using the checksum and the line length. I used this to log the number of messages in error, etc. I have posted the test function below; I'm sure there are better ways to write the code but it works.

    # Function to test message
    def is_message_valid(orig_line):
        # check if the checksum is valid
        # set variables
        line = orig_line.strip()
        line_length = len(line)
        check = 0
        received_checksum = ""
        # start at 1 to skip the leading $ (or ! for AIS)
        x = 1
        while x < line_length:
            current_char = line[x]
            # checksum is always the two chars after the *
            if current_char == "*":
                received_checksum = line[x + 1:x + 3]
                # if there is more to decode after the checksum then
                # this is a run-on message, so force a mismatch
                if line_length > (x + 3):
                    received_checksum = ""
                # no need to continue to the end of the
                # line: either error or have checksum
                break
            check = check ^ ord(current_char)
            x = x + 1
        # zero-pad so that a checksum such as 0x0A is formatted as "0A"
        if format(check, "02X") == received_checksum.upper():
            _valid = 1
        else:
            _valid = 0
        return _valid

Now for the translation of messages. There are a number of example Python packages on GitHub that translate NMEA messages, but I am currently only interested in specific messages. I also want to build appropriate JSON, so I feel I am better off writing this from scratch. Python has JSON libraries, so this is fairly straightforward once the message is defined. I start by looking at the wind and depth messages. I'm not currently seeing any speed messages, hopefully because the boat wasn't moving.

    import json

    def convert_iimwv_json(orig_line):
        # iimwv wind instrumentation
        column_list = orig_line.strip().split(",")
        # star separates the status from the checksum
        status_check_sum = column_list[5].split("*")
        checksum_value = status_check_sum[1]
        message = {'message_type': column_list[0],
                   'wind_angle': column_list[1],
                   'relative': column_list[2],
                   'wind_speed': column_list[3],
                   'wind_speed_units': column_list[4],
                   'status': status_check_sum[0],
                   'checksum': checksum_value[0:2]}
        # serialise the dictionary to a JSON string
        json_str = json.dumps(message)
        return json_str

I now have a way of checking, reading and converting the message to JSON from a log file. By switching from reading a file to using the Python socket library, I can read the stream directly from a TCP/IP port. Using nc, it's possible to simulate the messages being sent from the instruments by piping the log file to a port.
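
The switch from a file to a socket could look something like the sketch below. It is not the code I ended up with, just a minimal illustration using only the standard socket library; the function names `iter_lines`, `recv_chunks` and `read_stream` are invented for this example. The main thing to handle is that TCP delivers a stream of bytes, so a single read may contain half a message or several.

```python
import socket

def iter_lines(chunks):
    """Yield complete text lines from an iterable of byte chunks.

    The unfinished tail of each chunk stays in a buffer until its newline
    arrives; a trailing fragment with no newline is never yielded.
    """
    buffer = b""
    for chunk in chunks:
        buffer += chunk
        while b"\n" in buffer:
            line, buffer = buffer.split(b"\n", 1)
            text = line.decode("ascii", errors="replace").strip()
            if text:
                yield text

def recv_chunks(host, port, chunk_size=4096):
    """Yield raw byte chunks from a TCP connection until the peer closes it."""
    with socket.create_connection((host, port)) as conn:
        while True:
            chunk = conn.recv(chunk_size)
            if not chunk:
                break
            yield chunk

def read_stream(host, port):
    """Yield one message per line from the TCP server at host:port."""
    return iter_lines(recv_chunks(host, port))
```

Looping over `read_stream("127.0.0.1", 1234)` would then hand each line to the validation and conversion functions, assuming something is serving the log on that port. Keeping the buffering in `iter_lines` separate from the socket means it can be exercised with a plain list of byte strings, without the boat or a network.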

Opening port 1234 and listening for terminal input

nc -l 1234

Having spoken to some experts from Digital Yacht, it may be that the missing messages are because Raymarine SeaTalk is not transmitting an NMEA message for speed and a number of other readings. The way I have wired up the NMEA inputs and outputs to the AIS hub may also be causing the doubling up of messages and apparent corruptions. I need more kit! A bi-directional SeaTalk to NMEA converter.

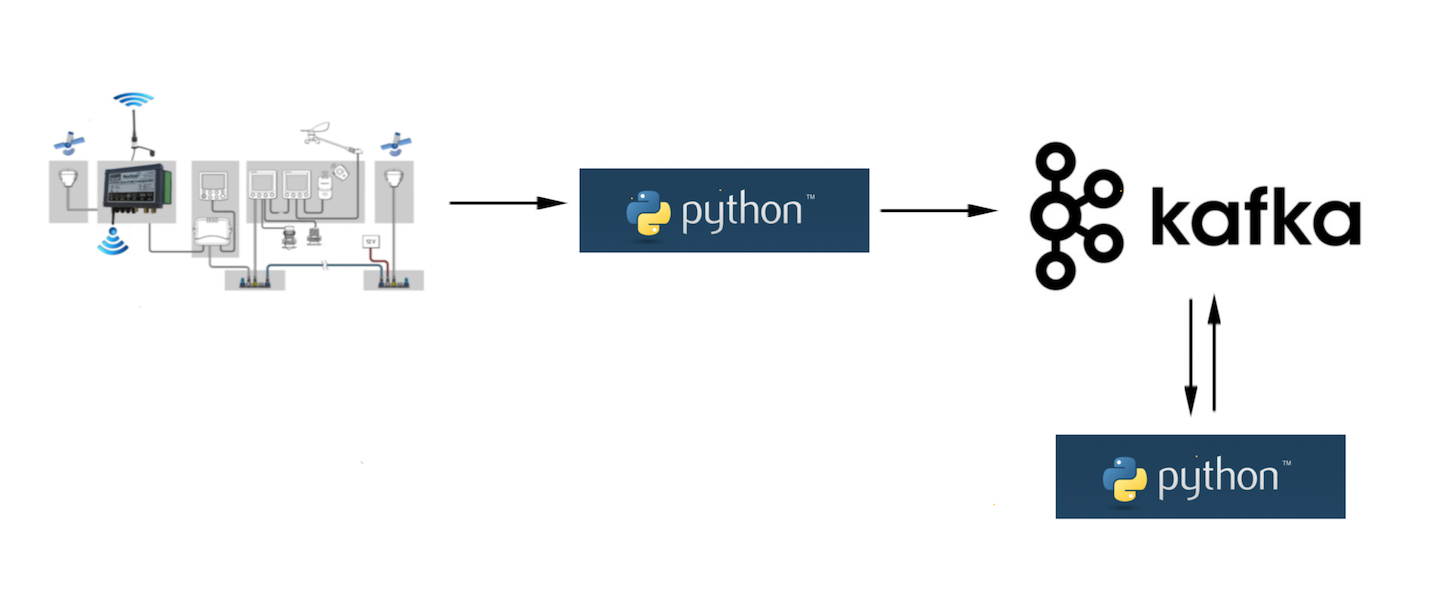

In the next article, I discuss the use of Kafka in the architecture. I want to buffer all my incoming raw messages. If I store all the incoming messages, I can build out the analytics over time, i.e. as I decode each message type. I will also set about creating a near real-time dashboard to display the incoming metrics. The use of Kafka will give me scalability in the model. I'm particularly thinking of the Round the Island Race: of its 1,800 boats, a good number will be transmitting AIS data.

Real-time Sailing Yacht Performance - stepping back a bit (Part 1.1)